
Evaluating Uncertainty Quantification in End-to-End Autonomous Driving Control

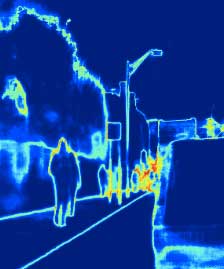

Self-driving has benefited from significant performance improvements with the rise of deep learning, with millions of miles driven without human intervention. Despite this, crashes and erroneous behaviours still occur, in part because verifying the correctness of deep neural networks (DNNs) is complex and safety guarantees are lacking. In this paper, we demonstrate how quantitative measures of uncertainty can be extracted in real time, and how their quality can be evaluated, in end-to-end controllers for self-driving cars. We propose evaluation techniques for the uncertainty on two separate architectures which use the uncertainty to predict crashes up to five seconds in advance. We find that mutual information, a measure of uncertainty in classification networks, is a promising indicator of forthcoming crashes.
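
To make the mutual information measure concrete, the following is a minimal sketch of how it is commonly estimated for a classification network: the entropy of the averaged prediction minus the average entropy of the individual predictions, taken over several stochastic forward passes. The abstract does not say how the samples are drawn, so the sampling scheme (for example, dropout kept active at test time) is an assumption here, and the function names and toy inputs are hypothetical rather than taken from the paper.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def mutual_information(samples):
    """Estimate mutual information from stochastic forward passes.

    `samples` is a list of T class-probability vectors, one per pass.
    How the passes are generated is an assumption of this sketch; the
    source abstract does not specify it.
    """
    if not samples:
        raise ValueError("need at least one sample")
    num_samples = len(samples)
    num_classes = len(samples[0])
    # Average the class probabilities over the T passes.
    mean_probs = [
        sum(s[c] for s in samples) / num_samples for c in range(num_classes)
    ]
    predictive_entropy = entropy(mean_probs)
    expected_entropy = sum(entropy(s) for s in samples) / num_samples
    return predictive_entropy - expected_entropy


# Toy inputs (hypothetical): passes that agree vs. passes that disagree.
agree = [[0.9, 0.1], [0.9, 0.1], [0.9, 0.1]]
disagree = [[1.0, 0.0], [0.0, 1.0]]

mi_agree = mutual_information(agree)
mi_disagree = mutual_information(disagree)
```

When the passes agree, the two entropy terms cancel and the estimate is close to zero; when they confidently disagree, the estimate is large, which is the kind of signal the abstract describes as a candidate indicator of forthcoming crashes.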

Rhiannon Michelmore, Marta Kwiatkowska, Yarin Gal

In submission
