
Wat heb je gezegd? Detecting Out-of-Distribution Translations with Variational Transformers

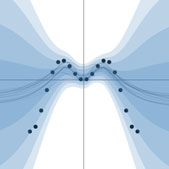

We use epistemic uncertainty to detect out-of-training-distribution sentences in Neural Machine Translation. To this end, we develop a measure of uncertainty designed specifically for long sequences of discrete random variables, which correspond to the words in the output sentence. This measure conveys epistemic uncertainty in a way akin to the Mutual Information (MI), which is used for single discrete random variables, as in classification. Our new measure resolves a major intractability that arises when existing approaches are applied naively to long sentences. We train a Transformer model with dropout on the task of German-English translation using WMT13 and Europarl, and show that, with dropout uncertainty, our measure is able to identify when Dutch source sentences, which use the same word types as German, are given to the model instead of German.
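
For readers unfamiliar with the classification-case MI that the abstract refers to, the following is a minimal sketch of how it is commonly estimated from several stochastic (dropout) forward passes: the entropy of the averaged prediction minus the average entropy of the individual predictions. This is not the sequence-level measure proposed in the paper, only the single-variable quantity it is compared to. The function names and the toy probability vectors are illustrative assumptions, not taken from the paper.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one categorical distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def mutual_information(samples):
    """Estimate MI between the prediction and the model parameters.

    `samples` is a list of categorical distributions over the same classes,
    one per stochastic forward pass (e.g. one per dropout mask).
    MI = H[mean of samples] - mean of H[sample].
    """
    num_samples = len(samples)
    num_classes = len(samples[0])
    # Average the predictive distributions across forward passes.
    mean_probs = [
        sum(sample[k] for sample in samples) / num_samples
        for k in range(num_classes)
    ]
    predictive_entropy = entropy(mean_probs)
    expected_entropy = sum(entropy(sample) for sample in samples) / num_samples
    return predictive_entropy - expected_entropy


# Hypothetical toy inputs: passes that agree vs. passes that disagree.
agree = [[0.9, 0.1], [0.9, 0.1], [0.9, 0.1]]
disagree = [[0.99, 0.01], [0.01, 0.99]]

mi_agree = mutual_information(agree)
mi_disagree = mutual_information(disagree)
```

When every pass returns the same distribution the estimate is essentially zero, while confident but conflicting passes give a large value, which is the behaviour one would want from an epistemic uncertainty signal. For an output sentence, the number of possible outcomes grows exponentially with length, which is presumably the intractability the abstract mentions for a naive application of this quantity.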

Tim Xiao, Aidan Gomez, Yarin Gal

Spotlight talk, Workshop on Bayesian Deep Learning, NeurIPS 2019

[Paper]