Learning CIFAR-10 with a Simple Entropy Estimator Using Information Bottleneck Objectives

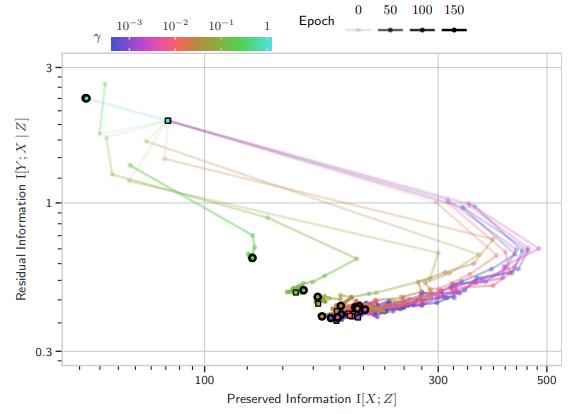

The Information Bottleneck (IB) principle characterizes learning and generalization in deep neural networks in terms of the change in two information-theoretic quantities and leads to a regularized objective function for training neural networks. These quantities are difficult to compute directly for deep neural networks. We show that it is possible to backpropagate through a simple entropy estimator to obtain an IB training method that works for modern neural network architectures. We evaluate our approach empirically on the CIFAR-10 dataset, showing that IB objectives can yield competitive performance with a conceptually simple approach while also performing well against adversarial attacks out of the box.

Andreas Kirsch, Clare Lyle, Yarin Gal

Uncertainty & Robustness in Deep Learning Workshop, ICML, 2020
