
Modelling non-reinforced preferences using selective attention

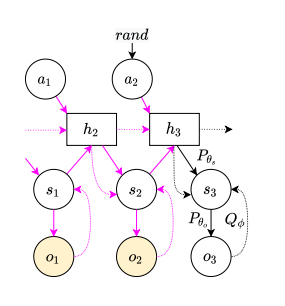

How can artificial agents learn non-reinforced preferences to continuously adapt their behaviour to a changing environment? We decompose this question into two challenges: (i) encoding diverse memories and (ii) selectively attending to these memories for preference formation. Our proposed non-reinforced preference learning mechanism using selective attention, Nore, addresses both by leveraging the agent’s world model to collect a diverse set of experiences, which are interleaved with imagined roll-outs to encode memories. These memories are selectively attended to, using attention and gating blocks, to update the agent’s preferences. We validate Nore in a modified OpenAI Gym FrozenLake environment (without any external signal), with and without volatility, under a fixed model of the environment, and compare its behaviour to Pepper, a Hebbian preference learning mechanism. We demonstrate that Nore provides a straightforward framework to induce exploratory preferences in the absence of external signals.
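As a rough illustration of what "selectively attending to memories with attention and gating" could look like, the sketch below updates a categorical preference over states from a small memory buffer. It is not the Nore architecture from the paper: the function names (`attend`, `update_preferences`), the use of the current preference as the attention query, the fixed scalar `gate_logit`, and the toy four-state memories are all assumptions made for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, memories):
    """Scaled dot-product attention; memories act as both keys and values.

    Assumption: each memory is a non-negative vector over states
    (e.g. state occupancy from a real or imagined roll-out).
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, mem)) / scale for mem in memories]
    weights = softmax(scores)
    context = [
        sum(w * mem[i] for w, mem in zip(weights, memories))
        for i in range(len(query))
    ]
    return context, weights


def update_preferences(prefs, memories, gate_logit=0.0):
    """Blend current preferences with the attended memory summary.

    Assumption: the gate is a fixed sigmoid(gate_logit); in a learned
    model it would typically depend on the inputs.
    """
    context, weights = attend(prefs, memories)
    gate = 1.0 / (1.0 + math.exp(-gate_logit))
    mixed = [(1.0 - gate) * p + gate * c for p, c in zip(prefs, context)]
    total = sum(mixed)
    return [m / total for m in mixed], weights


# Hypothetical example: uniform preferences over 4 states, 3 stored memories.
prefs = [0.25, 0.25, 0.25, 0.25]
memories = [
    [1.0, 0.0, 0.0, 0.0],  # roll-out that visited state 0
    [0.0, 0.0, 1.0, 0.0],  # roll-out that visited state 2
    [0.0, 0.0, 1.0, 0.0],  # another roll-out that visited state 2
]
new_prefs, weights = update_preferences(prefs, memories)
```

In this toy setting no reward or other external signal enters the update: preferences shift toward states that appear more often in the attended memories, and the gate controls how strongly they shift.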

Noor Sajid, Panagiotis Tigas, Zafeirios Fountas, Qinghai Guo, Alexey Zakharov, Lancelot Da Costa

Workshop Track - 1st Conference on Lifelong Learning Agents, 2022

arXiv
