Random Baselines for Simple Code Problems are Competitive with Code Evolution

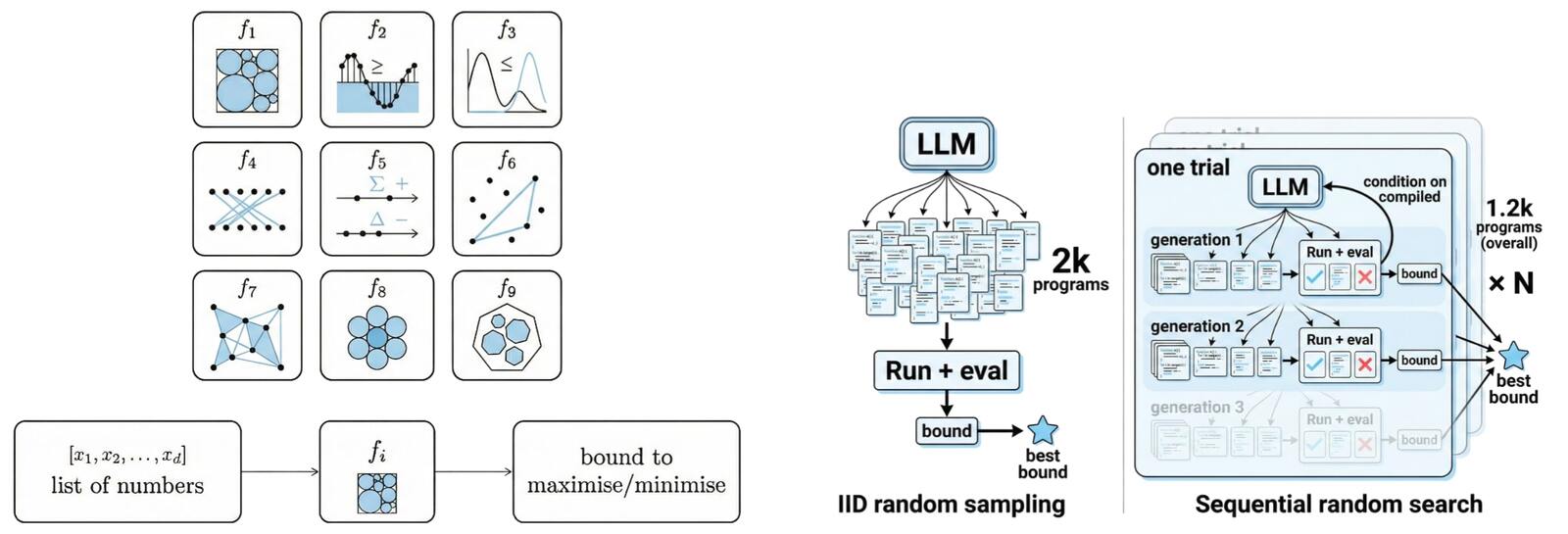

Sophisticated LLM-based search pipelines have been proposed for AI for scientific discovery, but is their complexity necessary? As a case study, we test how well two kinds of LLM-based random baselines – IID random sampling of programs and a sequential random search – perform on nine problems from the AlphaEvolve paper, compared to both AlphaEvolve and a strong open-source baseline, ShinkaEvolve. We find that random search works well: the sequential baseline matches AlphaEvolve on 4/9 problems and, using similar resources, matches or improves on ShinkaEvolve on 7/9. This implies that some improvements may stem not from the LLM-driven program search but from the manual formulation that makes the problems easy to optimize.

Yonatan Gideoni, Yujin Tang, Sebastian Risi, Yarin Gal

NeurIPS 2025 DL4C Workshop

[paper]