
Angus Nicolson

Associate Member (PhD) (2020–2024)

Angus was jointly supervised by Alison Noble and Yarin Gal within the EPSRC CDT in Health Data Science. He works on the application, characterisation and improvement of interpretable machine learning in healthcare, in particular ultrasound imaging. He grew up in Oxford, so he is one of the few who have had the fortune of seeing the city from both a town and a gown perspective. He received an MSci in Natural Sciences from Durham, specialising in computational chemistry and quantum mechanics in his final year. After his degree, he discovered his interest in applying machine learning to medical problems while working at Optellum, an Oxford-based startup focused on the early diagnosis of lung cancer.


Publications while at OATML:

TextCAVs: Debugging vision models using text

Concept-based interpretability methods are a popular form of explanation for deep learning models, providing explanations in terms of high-level, human-interpretable concepts. These methods typically find concept activation vectors (CAVs) using a probe dataset of concept examples. This requires labelled data for these concepts, which is expensive to obtain in the medical domain. We introduce TextCAVs: a novel method which creates CAVs using vision-language models such as CLIP, allowing explanations to be created solely from text descriptions of the concept rather than from image exemplars. This reduced cost of testing concepts allows many concepts to be tested and lets users interact with the model, testing new ideas as they think of them rather than waiting for image collection and annotation. In early experimental results, we demonstrate that TextCAVs produces reasonable explanations for a chest X-ray dataset (MIMIC-CXR) and natural images (ImageNet), and that these ...

Angus Nicolson, Yarin Gal, Alison Noble

arXiv

[paper]

Explaining Explainability: Understanding Concept Activation Vectors

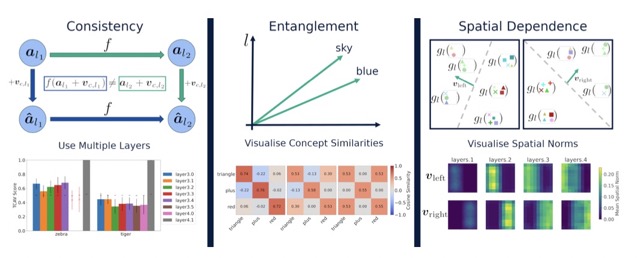

Recent interpretability methods propose using concept-based explanations to translate the internal representations of deep learning models into a language that humans are familiar with: concepts. This requires understanding which concepts are present in the representation space of a neural network. One popular method for finding concepts is Concept Activation Vectors (CAVs), which are learnt using a probe dataset of concept exemplars. In this work, we investigate three properties of CAVs: they may be (1) inconsistent between layers, (2) entangled with different concepts, and (3) spatially dependent. Each property presents both challenges and opportunities for interpreting models. We introduce tools designed to detect the presence of these properties, provide insight into how they affect the derived explanations, and provide recommendations to minimise their impact. These properties can also be used to our advantage. For example, we introduce spatially dependent CAVs to tes...

Angus Nicolson, J. Alison Noble, Lisa Schut, Yarin Gal

arXiv

[paper]
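The *Explaining Explainability* abstract above describes CAVs as vectors learnt from a probe dataset of concept exemplars. In the standard formulation introduced with TCAV, this means training a linear classifier that separates a layer's activations on concept examples from its activations on random examples, then taking the classifier's normalised weight vector as the CAV. The sketch below assumes that recipe. It uses plain NumPy logistic regression rather than any particular library and runs on synthetic activations. The function `learn_cav` and its parameters are hypothetical illustrations, not code from either paper.

```python
import numpy as np


def learn_cav(concept_acts, random_acts, lr=0.1, steps=500):
    """Hypothetical helper: fit a logistic-regression probe that separates
    concept activations (label 1) from random activations (label 0) and
    return its unit-norm weight vector, i.e. the CAV."""
    X = np.vstack([concept_acts, random_acts])
    y = np.concatenate([np.ones(len(concept_acts)), np.zeros(len(random_acts))])
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(concept)
        grad = p - y                            # gradient of the log-loss w.r.t. the logits
        w -= lr * (X.T @ grad) / len(y)         # full-batch gradient descent on the weights
        b -= lr * grad.mean()                   # and on the bias
    return w / np.linalg.norm(w)                # the CAV is the direction of w


# Synthetic stand-in for layer activations: concept examples are shifted
# along one planted direction, so a good CAV should recover that direction.
rng = np.random.default_rng(0)
dim = 16
true_dir = np.zeros(dim)
true_dir[3] = 1.0
random_acts = rng.normal(size=(200, dim))
concept_acts = rng.normal(size=(200, dim)) + 3.0 * true_dir

cav = learn_cav(concept_acts, random_acts)
print(float(cav @ true_dir))  # cosine similarity between the CAV and the planted direction
```

Because the CAV is only a direction, its sign and orientation matter more than its scale, which is why it is normalised. Properties such as consistency between layers can then be probed by learning a CAV at each layer of interest and comparing the results.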