
Yonatan Gideoni

PhD, started 2023

Yonatan is a DPhil student in the AIMS CDT, advised by Yarin Gal and Michael Bronstein. He is interested in improving the scaling and efficiency of models, and believes that foundational applied research is the best way to do good. He did his master’s at Cambridge and holds a bachelor’s in physics from the Hebrew University of Jerusalem. Yonatan has worked on machine learning for quantum computing at Qruise and Forschungszentrum Jülich and on maps for autonomous vehicles at Mobileye, and has taught at the Israeli Arts and Sciences Academy. Before that, he mentored and volunteered with robotics teams, which reached international competitions several times. He is funded by the AIMS CDT and a Rhodes scholarship.


Publications while at OATML:

Random Baselines for Simple Code Problems are Competitive with Code Evolution

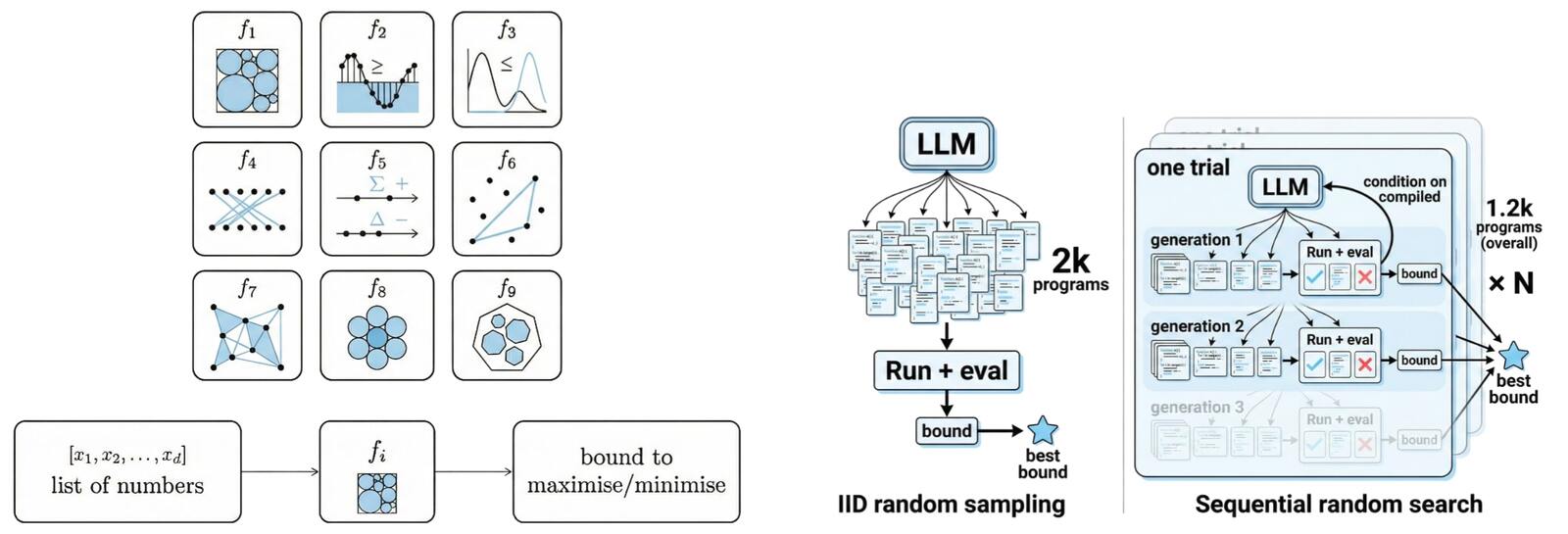

Sophisticated LLM-based search pipelines have been proposed for AI for scientific discovery, but is their complexity necessary? As a case study, we test how well two kinds of LLM-based random baselines, IID random sampling of programs and a sequential random search, perform on nine problems from the AlphaEvolve paper, compared with both AlphaEvolve and a strong open-source baseline, ShinkaEvolve. We find that random search works well: the sequential baseline matches AlphaEvolve on 4/9 problems and, using similar resources, matches or improves on ShinkaEvolve on 7/9. This implies that some improvements may stem not from the LLM-driven program search but from the manual problem formulation that makes the problems easy to optimize.
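The two baselines differ mainly in whether a new sample can see earlier ones. The sketch below is a toy illustration under stated assumptions, not the paper's implementation: `propose` is a hypothetical stand-in for the LLM call that writes a candidate program, `score` is a hypothetical toy evaluator, candidates are plain numeric vectors rather than programs, and the choice to condition the sequential variant on the best candidate found so far is our assumption.

```python
import random
from typing import List, Optional, Tuple

Candidate = List[float]


def score(x: Candidate) -> float:
    """Hypothetical toy evaluator: higher is better, maximum 0.0 at all-0.5."""
    return -sum((xi - 0.5) ** 2 for xi in x)


def propose(rng: random.Random, context: Optional[Candidate], dim: int) -> Candidate:
    """Hypothetical stand-in for an LLM call that writes a candidate.

    With no context it samples from scratch; with context it returns a
    clipped Gaussian perturbation of the given candidate.
    """
    if context is None:
        return [rng.random() for _ in range(dim)]
    return [min(1.0, max(0.0, c + rng.gauss(0.0, 0.1))) for c in context]


def iid_random_sampling(budget: int, dim: int, seed: int = 0) -> Tuple[Candidate, float]:
    """Draw `budget` independent candidates and keep the best-scoring one."""
    if budget < 1:
        raise ValueError("budget must be at least 1")
    rng = random.Random(seed)
    best: Optional[Candidate] = None
    best_score = float("-inf")
    for _ in range(budget):
        cand = propose(rng, None, dim)  # every sample ignores earlier ones
        s = score(cand)
        if s > best_score:
            best, best_score = cand, s
    assert best is not None
    return best, best_score


def sequential_random_search(budget: int, dim: int, seed: int = 0) -> Tuple[Candidate, float]:
    """Condition each new sample on the best candidate so far (an assumption)."""
    if budget < 1:
        raise ValueError("budget must be at least 1")
    rng = random.Random(seed)
    best = propose(rng, None, dim)  # the first sample is drawn from scratch
    best_score = score(best)
    for _ in range(budget - 1):
        cand = propose(rng, best, dim)  # later samples see the incumbent
        s = score(cand)
        if s > best_score:
            best, best_score = cand, s
    return best, best_score


if __name__ == "__main__":
    # Same evaluation budget for both, for a like-for-like comparison.
    for name, search in [("iid", iid_random_sampling), ("sequential", sequential_random_search)]:
        _, best_score = search(budget=200, dim=5, seed=0)
        print(f"{name}: best score {best_score:.4f}")
```

Both functions spend exactly `budget` evaluations, which mirrors the abstract's point about comparing methods under similar resources; any gap between them in this toy setting says nothing about the paper's results.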

Yonatan Gideoni, Yujin Tang, Sebastian Risi, Yarin Gal

NeurIPS 2025 DL4C Workshop

[paper]