
Resolving Causal Confusion in Reinforcement Learning via Robust Exploration

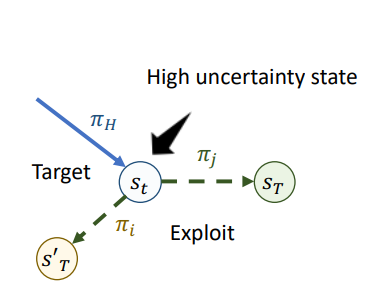

A reinforcement learning agent must distinguish between spurious correlations and causal relationships in its environment in order to robustly achieve its goals. Causal confusion has been defined and studied in various constrained settings, such as imitation learning and the partial observability setting with latent confounders. We now show that causal confusion can also occur in online reinforcement learning (RL) settings. We formalize the problem of identifying causal structure in a Markov Decision Process and highlight the central role played by the data collection policy in identifying and avoiding spurious correlations. We find that many RL algorithms, including those with PAC-MDP guarantees, fall prey to causal confusion under insufficient exploration policies. To address this, we present a robust exploration strategy which enables causal hypothesis-testing by interaction with the environment. Our method outperforms existing state-of-the-art approaches at avoiding causal confusion, improving robustness and generalization in a range of tasks.

Clare Lyle, Amy Zhang, Minqi Jiang, Joelle Pineau, Yarin Gal

Self-Supervision for Reinforcement Learning Workshop, ICLR 2021

[Paper]