
Lorenz Kuhn

PhD (2021–2024)

Lorenz was a DPhil student in Computer Science working with Prof. Yarin Gal at the University of Oxford. His main research interests include improving our theoretical understanding of deep learning, as well as making deep learning safer and more reliable for real-world use cases. Lorenz is a recipient of an FHI DPhil Scholarship and holds an MSc in Computer Science from ETH Zurich.

Previously, Lorenz has undertaken research on pruning and generalization in deep neural networks under the supervision of Prof. Yarin Gal and Prof. Andreas Krause, on medical recommendation systems with Prof. Ce Zhang, and on medical information retrieval with Prof. Carsten Eickhoff and Prof. Thomas Hofmann.

Additionally, Lorenz has worked on making very large language models more efficient at Cohere, on various data science projects at the Boston Consulting Group, and on medical recommendation systems at IBM Research and ETH Zurich.


Publications while at OATML:

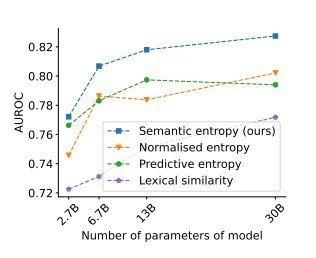

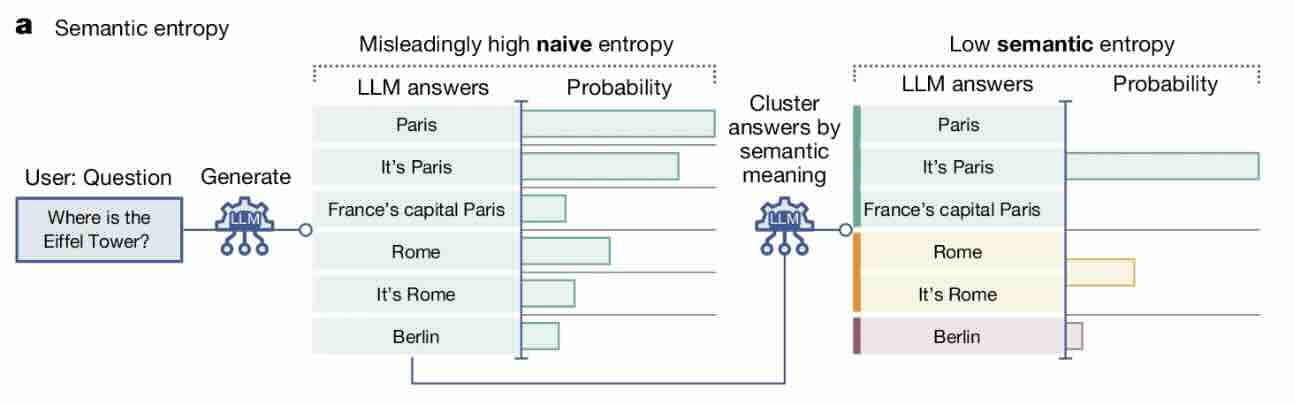

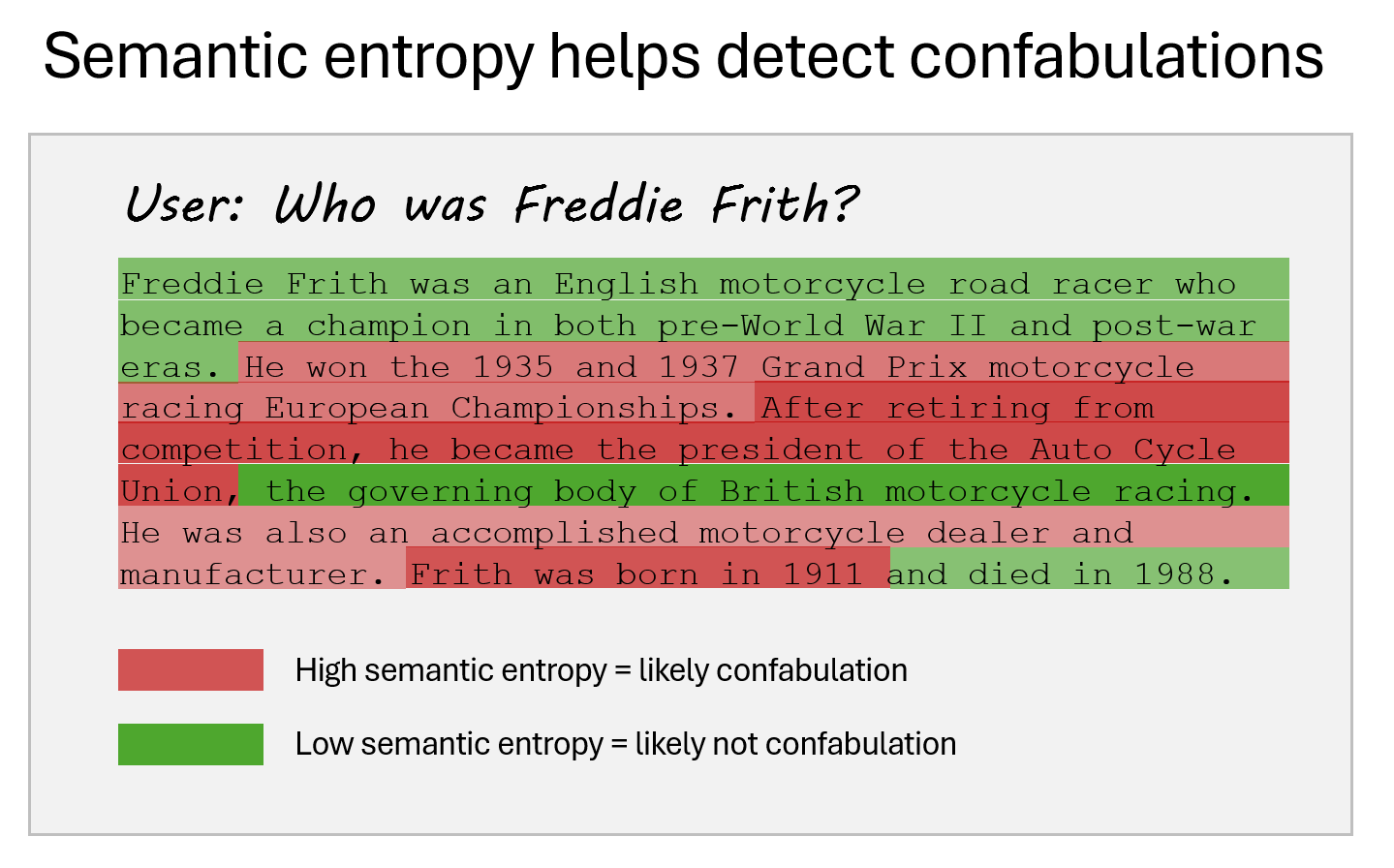

Detecting hallucinations in large language models using semantic entropy

Large language model (LLM) systems, such as ChatGPT or Gemini, can show impressive reasoning and question-answering capabilities but often ‘hallucinate’ false outputs and unsubstantiated answers. Answering unreliably or without the necessary information prevents adoption in diverse fields, with problems including fabrication of legal precedents or untrue facts in news articles and even posing a risk to human life in medical domains such as radiology. Encouraging truthfulness through supervision or reinforcement has only been partially successful. Researchers need a general method for detecting hallucinations in LLMs that works even with new and unseen questions to which humans might not know the answer. Here we develop new methods grounded in statistics, proposing entropy-based uncertainty estimators for LLMs to detect a subset of hallucinations—confabulations—which are arbitrary and incorrect generations. Our method addresses the fact that one idea can be expressed in many ways by... [full abstract]

Sebastian Farquhar, Jannik Kossen, Lorenz Kuhn, Yarin Gal

Nature

[paper]

Semantic Uncertainty: Linguistic Invariances for Uncertainty Estimation in Natural Language Generation

We introduce a method to measure uncertainty in large language models. For tasks like question answering, it is essential to know when we can trust the natural language outputs of foundation models. We show that measuring uncertainty in natural language is challenging because of "semantic equivalence" -- different sentences can mean the same thing. To overcome these challenges we introduce semantic entropy -- an entropy which incorporates linguistic invariances created by shared meanings. Our method is unsupervised, uses only a single model, and requires no modifications to off-the-shelf language models. In comprehensive ablation studies we show that the semantic entropy is more predictive of model accuracy on question answering data sets than comparable baselines.

Lorenz Kuhn, Yarin Gal, Sebastian Farquhar

arXiv

[paper]
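The idea of an entropy computed over meanings rather than over strings can be illustrated with a small sketch. This is not the authors' implementation: the function names, the greedy clustering, and the toy `same_meaning` check are all assumptions made for illustration. In practice the equivalence test would be a learned model, and the probabilities would come from sampling a language model.

```python
import math
from typing import Callable, List, Sequence


def cluster_by_meaning(
    answers: Sequence[str], same_meaning: Callable[[str, str], bool]
) -> List[List[int]]:
    """Greedily group answer indices whose texts are judged equivalent."""
    clusters: List[List[int]] = []
    for i, answer in enumerate(answers):
        for cluster in clusters:
            # Compare against the first member of each existing cluster.
            if same_meaning(answers[cluster[0]], answer):
                cluster.append(i)
                break
        else:
            clusters.append([i])
    return clusters


def semantic_entropy(
    answers: Sequence[str],
    probs: Sequence[float],
    same_meaning: Callable[[str, str], bool],
) -> float:
    """Entropy over meaning clusters instead of over individual strings."""
    total = sum(probs)
    entropy = 0.0
    for cluster in cluster_by_meaning(answers, same_meaning):
        p = sum(probs[i] for i in cluster) / total  # probability mass of one meaning
        if p > 0:
            entropy -= p * math.log(p)
    return entropy


def toy_same_meaning(a: str, b: str) -> bool:
    """Hypothetical stand-in: two answers match if their last words agree."""
    def last_word(s: str) -> str:
        return s.lower().strip(" .!?").split()[-1]
    return last_word(a) == last_word(b)


answers = ["Paris", "It is Paris.", "Rome"]
probs = [0.5, 0.25, 0.25]
print(semantic_entropy(answers, probs, toy_same_meaning))
```

In this toy example "Paris" and "It is Paris." fall into one cluster, so the entropy is computed over two meanings rather than three strings and comes out lower than the plain entropy of the three answers.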

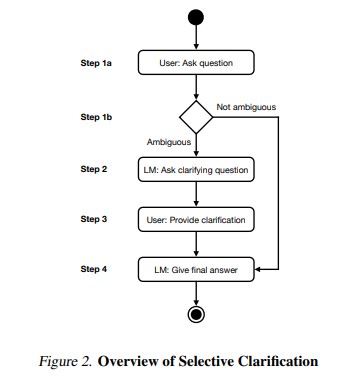

CLAM: Selective Clarification for Ambiguous Questions with Generative Language Models

Users often ask dialogue systems ambiguous questions that require clarification. We show that current language models rarely ask users to clarify ambiguous questions and instead provide incorrect answers. To address this, we introduce CLAM: a framework for getting language models to selectively ask for clarification about ambiguous user questions. In particular, we show that we can prompt language models to detect whether a given question is ambiguous, generate an appropriate clarifying question to ask the user, and give a final answer after receiving clarification. We also show that we can simulate users by providing language models with privileged information. This lets us automatically evaluate multi-turn clarification dialogues. Finally, CLAM significantly improves language models' accuracy on mixed ambiguous and unambiguous questions relative to SotA.

Lorenz Kuhn, Sebastian Farquhar, Yarin Gal

arXiv

[paper]

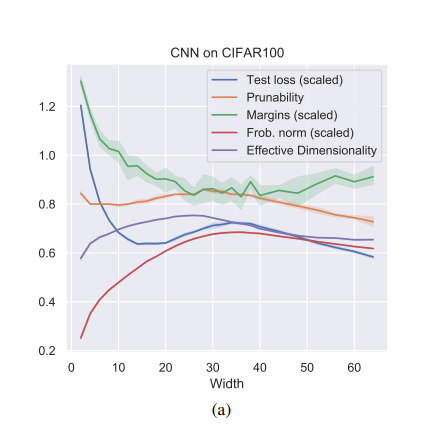

Robustness to Pruning Predicts Generalization in Deep Neural Networks

Existing generalization measures that aim to capture a model's simplicity based on parameter counts or norms fail to explain generalization in overparameterized deep neural networks. In this paper, we introduce a new, theoretically motivated measure of a network's simplicity which we call prunability: the smallest fraction of the network's parameters that can be kept while pruning without adversely affecting its training loss. We show that this measure is highly predictive of a model's generalization performance across a large set of convolutional networks trained on CIFAR-10, does not grow with network size unlike existing pruning-based measures, and exhibits high correlation with test set loss even in a particularly challenging double descent setting. Lastly, we show that the success of prunability cannot be explained by its relation to known complexity measures based on models' margin, flatness of minima and optimization speed, finding that our new measure is similar to -... [full abstract]

Lorenz Kuhn, Clare Lyle, Aidan Gomez, Jonas Rothfuss, Yarin Gal

arXiv

[paper]
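The definition of prunability in the abstract above (the smallest fraction of parameters that can be kept without hurting training loss) can be sketched on a toy linear model. Everything here is an assumption for illustration: the use of magnitude pruning, the absolute `tolerance` on the loss, the grid of candidate fractions, and the toy data are not taken from the paper.

```python
from typing import Callable, List, Sequence


def magnitude_prune(weights: Sequence[float], keep_fraction: float) -> List[float]:
    """Zero out all but the largest-magnitude weights (assumed pruning scheme)."""
    k = round(keep_fraction * len(weights))
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(order[:k])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


def prunability(
    weights: Sequence[float],
    train_loss: Callable[[Sequence[float]], float],
    fractions: Sequence[float],
    tolerance: float = 0.01,
) -> float:
    """Smallest candidate fraction whose pruned loss stays within `tolerance`."""
    base = train_loss(weights)
    for fraction in sorted(fractions):
        if train_loss(magnitude_prune(weights, fraction)) <= base + tolerance:
            return fraction
    return 1.0  # no candidate fraction preserved the training loss


# Toy training set: targets depend only on the first two input features.
XS = [
    [1, 0, 1, 0, 1, 0, 1, 0],
    [0, 1, 0, 1, 0, 1, 0, 1],
    [1, 1, 1, 1, 1, 1, 1, 1],
    [2, -1, 0, 1, 0, -1, 0, 1],
]
YS = [2.0 * x[0] - 3.0 * x[1] for x in XS]


def mse(weights: Sequence[float]) -> float:
    """Mean squared error of the linear model on the toy training set."""
    preds = [sum(w * xi for w, xi in zip(weights, x)) for x in XS]
    return sum((p - y) ** 2 for p, y in zip(preds, YS)) / len(YS)


trained = [2.0, -3.0, 0.01, -0.02, 0.005, 0.0, 0.01, 0.0]
print(prunability(trained, mse, fractions=[0.125, 0.25, 0.5, 1.0]))
```

Here only two of the eight weights carry the signal, so keeping a quarter of them preserves the training loss while keeping a single weight does not.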

News items mentioning Lorenz Kuhn:

Group work on detecting hallucinations in LLMs published in Nature

19 Jun 2024

OATML group members Sebastian Farquhar, Jannik Kossen and Yarin Gal, along with group alumnus Lorenz Kuhn, published a study in Nature which introduces a breakthrough method for detecting hallucinations in LLMs using semantic entropy. You can read the paper here.

Reproducibility and Code

Detecting hallucinations in large language models using semantic entropy

This is the code repository for the paper “Detecting hallucinations in large language models using semantic entropy”.

Code • Sebastian Farquhar, Jannik Kossen, Lorenz Kuhn, Yarin Gal

Blog Posts

Detecting hallucinations in large language models using semantic entropy

Can we detect confabulations, where LLMs invent plausible-sounding factoids? We show how, in research published in Nature. …

Full post... Sebastian Farquhar, Jannik Kossen, Lorenz Kuhn, Yarin Gal, 19 Jun 2024

OATML Conference papers at NeurIPS 2022

OATML group members and collaborators are proud to present 8 papers at the NeurIPS 2022 main conference, and 11 workshop papers. …

Full post... Yarin Gal, Freddie Kalaitzis, Shreshth Malik, Lorenz Kuhn, Gunshi Gupta, Jannik Kossen, Pascal Notin, Andrew Jesson, Panagiotis Tigas, Tim G. J. Rudner, Sebastian Farquhar, Ilia Shumailov, 25 Nov 2022