
Luckeciano Carvalho Melo

PhD, started 2023

Luckeciano is a DPhil student in the OATML group, supervised by Yarin Gal and Alessandro Abate. His research interests lie in designing agents that learn behaviors through interaction in an efficient, generalist, safe, and adaptive way. He believes that such agents emerge from three main pillars: semantically rich representations of entities in the world; self-supervised World Models with inductive biases for memory and counterfactual reasoning; and fast policy adaptation mechanisms for out-of-distribution generalization.

Previously, he was an Applied Scientist at Microsoft, where he worked on multi-modal representation learning and large language models for semantic understanding of web data. He also led the RL Research group at the Center of Excellence in AI in Brazil, developing real-world RL applications for scalable digital platforms with industry partners.


Publications while at OATML:

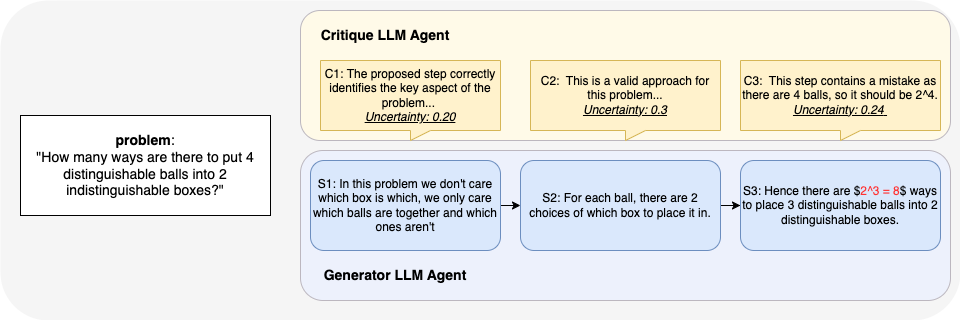

Uncertainty-Aware Step-wise Verification with Generative Reward Models

Complex multi-step reasoning tasks, such as solving mathematical problems, remain challenging for large language models (LLMs). While outcome supervision is commonly used, process supervision via process reward models (PRMs) provides intermediate rewards to verify step-wise correctness in solution traces. However, as proxies for human judgement, PRMs suffer from reliability issues, including susceptibility to reward hacking. In this work, we propose leveraging uncertainty quantification (UQ) to enhance the reliability of step-wise verification with generative reward models for mathematical reasoning tasks. We introduce CoT Entropy, a novel UQ method that outperforms existing approaches in quantifying a PRM's uncertainty in step-wise verification. Our results demonstrate that incorporating uncertainty estimates improves the robustness of judge-LM PRMs, leading to more reliable verification.

Daniella (Zihuiwen) Ye, Luckeciano Carvalho Melo, Younesse Kaddar, Phil Blunsom, Sam Staton, Yarin Gal

arXiv

[paper]

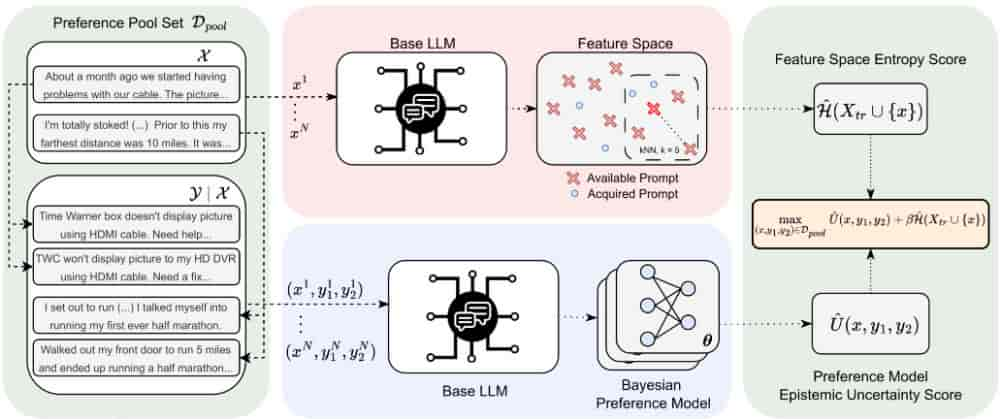

Deep Bayesian Active Learning for Preference Modeling in Large Language Models

Leveraging human preferences for steering the behavior of Large Language Models (LLMs) has demonstrated notable success in recent years. Nonetheless, data selection and labeling are still a bottleneck for these systems, particularly at large scale. Hence, selecting the most informative points for acquiring human feedback may considerably reduce the cost of preference labeling and unleash the further development of LLMs. Bayesian Active Learning provides a principled framework for addressing this challenge and has demonstrated remarkable success in diverse settings. However, previous attempts to employ it for Preference Modeling did not meet such expectations. In this work, we identify that naive epistemic uncertainty estimation leads to the acquisition of redundant samples. We address this by proposing the Bayesian Active Learner for Preference Modeling (BAL-PM), a novel stochastic acquisition policy that not only targets points of high epistemic uncertainty according to the prefer...

Luckeciano Carvalho Melo, Panagiotis Tigas, Alessandro Abate, Yarin Gal

38th Conference on Neural Information Processing Systems (NeurIPS 2024)

[paper]

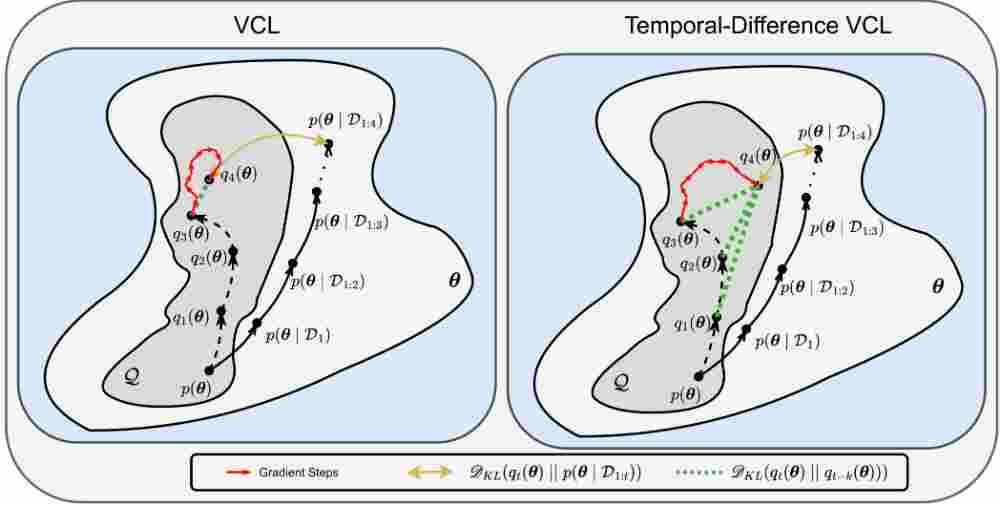

Temporal-Difference Variational Continual Learning

A crucial capability of Machine Learning models in real-world applications is the ability to continuously learn new tasks. This adaptability allows them to respond to potentially inevitable shifts in the data-generating distribution over time. However, in Continual Learning (CL) settings, models often struggle to balance learning new tasks (plasticity) with retaining previous knowledge (memory stability). Consequently, they are susceptible to Catastrophic Forgetting, which degrades performance and undermines the reliability of deployed systems. Variational Continual Learning methods tackle this challenge by employing a learning objective that recursively updates the posterior distribution and enforces it to stay close to the latest posterior estimate. Nonetheless, we argue that these methods may be ineffective due to compounding approximation errors over successive recursions. To mitigate this, we propose new learning objectives that integrate the regularization effects of multiple...

Luckeciano Carvalho Melo, Alessandro Abate, Yarin Gal

arXiv

[paper]

Blog Posts

OATML conference papers at NeurIPS 2025

OATML group members and collaborators are proud to present 8 papers at NeurIPS 2025. …

Yarin Gal, Lukas Aichberger, Jannik Kossen, Luckeciano Carvalho Melo, Gunshi Gupta, Muhammed Razzak, Freddie Bickford Smith, Xander Davies, Hazel Kim, 19 Sep 2025