Lisa Schut

PhD, started 2020

Lisa Schut is a PhD student in the OATML group, supervised by Yarin Gal. Her main interests center on making machine learning algorithms more robust and interpretable. Lisa holds two master’s degrees, in Statistics and Computer Science, from the University of Oxford. She visited the CoCoSci lab, led by Prof. Griffiths, and has interned at Google Brain with Been Kim and at DeepMind with Ulrich Paquet. Previously, Lisa was a member of the Dutch women’s Olympic chess team. Lisa is jointly funded by the EPSRC and DeepMind.

Publications while at OATML:

Bridging the Human–AI Knowledge Gap through Concept Discovery and Transfer in AlphaZero

AI systems have attained superhuman performance across various domains. If the hidden knowledge encoded in these highly capable systems can be leveraged, human knowledge and performance can be advanced. Yet, this internal knowledge is difficult to extract. Due to the vast space of possible internal representations, searching for meaningful new conceptual knowledge can be like finding a needle in a haystack. Here, we introduce a method that extracts new chess concepts from AlphaZero, an AI system that mastered chess via self-play without human supervision. Our method excavates vectors that represent concepts from AlphaZero’s internal representations using convex optimization, and filters the concepts based on teachability (whether the concept is transferable to another AI agent) and novelty (whether the concept contains information not present in human chess games). These steps ensure that the discovered concepts are useful and meaningful. For the resulting set of concepts, prototyp... [full abstract]

Lisa Schut, Nenad Tomasev, Tom McGrath, Demis Hassabis, Ulrich Paquet, Been Kim

PNAS (2025)

[paper]

Do Multilingual LLMs Think In English?

Large language models (LLMs) have multilingual capabilities and can solve tasks across various languages. However, we show that current LLMs make key decisions in a representation space closest to English, regardless of their input and output languages. Exploring the internal representations with a logit lens for sentences in French, German, Dutch, and Mandarin, we show that the LLM first emits representations close to English for semantically-loaded words before translating them into the target language. We further show that activation steering in these LLMs is more effective when the steering vectors are computed in English rather than in the language of the inputs and outputs. This suggests that multilingual LLMs perform key reasoning steps in a representation that is heavily shaped by English in a way that is not transparent to system users.

Lisa Schut, Yarin Gal, Sebastian Farquhar

arXiv

[paper]

Semantic Entropy Probes: Robust and Cheap Hallucination Detection in LLMs

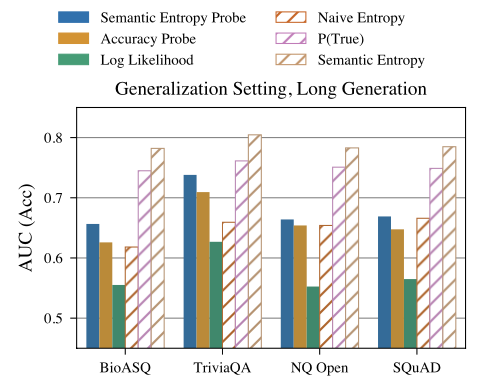

We propose semantic entropy probes (SEPs), a cheap and reliable method for uncertainty quantification in Large Language Models (LLMs). Hallucinations, which are plausible-sounding but factually incorrect and arbitrary model generations, present a major challenge to the practical adoption of LLMs. Recent work by Farquhar et al. (2024) proposes semantic entropy (SE), which can detect hallucinations by estimating uncertainty in the space of semantic meaning for a set of model generations. However, the 5-to-10-fold increase in computation cost associated with SE computation hinders practical adoption. To address this, we propose SEPs, which directly approximate SE from the hidden states of a single generation. SEPs are simple to train and do not require sampling multiple model generations at test time, reducing the overhead of semantic uncertainty quantification to almost zero. We show that SEPs retain high performance for hallucination detection and generalize better to out-of-distributi... [full abstract]

Jannik Kossen, Jiatong Han, Muhammed Razzak, Lisa Schut, Shreshth Malik, Yarin Gal

ICML Workshop on Foundation Models in the Wild, 2024

[OpenReview] [arXiv]

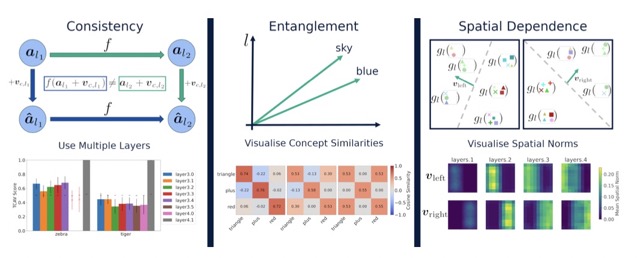

Explaining Explainability: Understanding Concept Activation Vectors

Recent interpretability methods propose using concept-based explanations to translate the internal representations of deep learning models into a language that humans are familiar with: concepts. This requires understanding which concepts are present in the representation space of a neural network. One popular method for finding concepts is Concept Activation Vectors (CAVs), which are learnt using a probe dataset of concept exemplars. In this work, we investigate three properties of CAVs. CAVs may be: (1) inconsistent between layers, (2) entangled with different concepts, and (3) spatially dependent. Each property provides both challenges and opportunities in interpreting models. We introduce tools designed to detect the presence of these properties, provide insight into how they affect the derived explanations, and provide recommendations to minimise their impact. Understanding these properties can be used to our advantage. For example, we introduce spatially dependent CAVs to tes... [full abstract]

Angus Nicolson, J. Alison Noble, Lisa Schut, Yarin Gal

arXiv

[paper]

Diversifying AI - Towards Creative Chess with AlphaZero

In recent years, Artificial Intelligence (AI) systems have surpassed human intelligence in a variety of computational tasks. However, AI systems, like humans, make mistakes, have blind spots, hallucinate, and struggle to generalize to new situations. This work explores whether AI can benefit from creative decision-making mechanisms when pushed to the limits of its computational rationality. In particular, we investigate whether a team of diverse AI systems can outperform a single AI in challenging tasks by generating more ideas as a group and then selecting the best ones. We study this question in the game of chess, the so-called “drosophila of AI”. We build on AlphaZero (AZ) and extend it to represent a league of agents via a latent-conditioned architecture, which we call AZdb. We train AZdb to generate a wider range of ideas using behavioral diversity techniques and select the most promising ones with sub-additive planning. Our experiments suggest that AZdb plays chess in diverse... [full abstract]

Tom Zahavy, Vivek Veeriah, Shaobo Hou, Kevin Waugh, Matthew Lai, Edouard Leurent, Nenad Tomasev, Lisa Schut, Demis Hassabis, Satinder Singh

arXiv pre-print (2023)

[paper]

Speedy Performance Estimation for Neural Architecture Search

Reliable yet efficient evaluation of generalisation performance of a proposed architecture is crucial to the success of neural architecture search (NAS). Traditional approaches face a variety of limitations: training each architecture to completion is prohibitively expensive, early stopped validation accuracy may correlate poorly with fully trained performance, and model-based estimators require large training sets. We instead propose to estimate the final test performance based on a simple measure of training speed. Our estimator is theoretically motivated by the connection between generalisation and training speed, and is also inspired by the reformulation of a PAC-Bayes bound under the Bayesian setting. Our model-free estimator is simple, efficient, and cheap to implement, and does not require hyperparameter-tuning or surrogate training before deployment. We demonstrate on various NAS search spaces that our estimator consistently outperforms other alternatives in achieving bette... [full abstract]

Binxin (Robin) Ru, Clare Lyle, Lisa Schut, Miroslav Fil, Mark van der Wilk, Yarin Gal

NeurIPS 2021

[Paper]

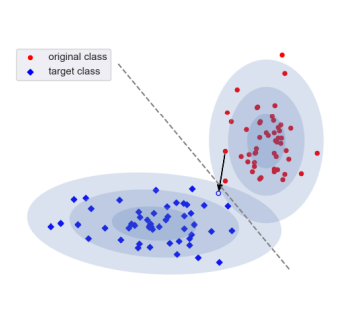

DeDUCE: Generating Counterfactual Explanations At Scale

When an image classifier outputs a wrong class label, it can be helpful to see what changes in the image would lead to a correct classification. This is the aim of algorithms generating counterfactual explanations. However, there is no easily scalable method to generate such counterfactuals. We develop a new algorithm providing counterfactual explanations for large image classifiers trained with spectral normalisation at low computational cost. We empirically compare this algorithm against baselines from the literature; our novel algorithm consistently finds counterfactuals that are much closer to the original inputs. At the same time, the realism of these counterfactuals is comparable to the baselines.

Benedikt Höltgen, Lisa Schut, Jan Brauner, Yarin Gal

OpenReview (19 Dec 2021)

[Paper]

Generating Interpretable Counterfactual Explanations By Implicit Minimisation of Epistemic and Aleatoric Uncertainties

Counterfactual explanations (CEs) are a practical tool for demonstrating why machine learning classifiers make particular decisions. For CEs to be useful, it is important that they are easy for users to interpret. Existing methods for generating interpretable CEs rely on auxiliary generative models, which may not be suitable for complex datasets, and incur engineering overhead. We introduce a simple and fast method for generating interpretable CEs in a white-box setting without an auxiliary model, by using the predictive uncertainty of the classifier. Our experiments show that our proposed algorithm generates more interpretable CEs, according to IM1 scores, than existing methods. Additionally, our approach allows us to estimate the uncertainty of a CE, which may be important in safety-critical applications, such as those in the medical domain.

Lisa Schut, Oscar Key, Rory McGrath, Luca Costabello, Bogdan Sacaleanu, Medb Corcoran, Yarin Gal

AISTATS, 2021

[Paper] [Code]

A Bayesian Perspective on Training Speed and Model Selection

We take a Bayesian perspective to illustrate a connection between training speed and the marginal likelihood in linear models. This provides two major insights: first, that a measure of a model's training speed can be used to estimate its marginal likelihood. Second, that this measure, under certain conditions, predicts the relative weighting of models in linear model combinations trained to minimize a regression loss. We verify our results in model selection tasks for linear models and for the infinite-width limit of deep neural networks. We further provide encouraging empirical evidence that the intuition developed in these settings also holds for deep neural networks trained with stochastic gradient descent. Our results suggest a promising new direction towards explaining why neural networks trained with stochastic gradient descent are biased towards functions that generalize well.

Clare Lyle, Lisa Schut, Binxin (Robin) Ru, Yarin Gal, Mark van der Wilk

NeurIPS, 2020

[Paper] [Code] [BibTex]
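
One standard identity that makes the link between training speed and marginal likelihood in the abstract above concrete is the chain rule, log p(y_1, ..., y_n) = Σ_i log p(y_i | y_1, ..., y_{i-1}): each term scores how well a model fitted to the first i-1 points predicts the next one. The sketch below checks this numerically. It is a toy illustration under stated assumptions (a conjugate Gaussian-mean model with known noise variance, and hypothetical function names), not the paper's code or experimental setup.

```python
import math


def log_normal_pdf(x, mean, var):
    """Log density of a univariate Gaussian N(mean, var) at x."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)


def sequential_log_evidence(ys, prior_var, noise_var):
    """Sum of log posterior-predictive densities, one observation at a time.

    Assumed toy model (not from the paper):
        mu ~ N(0, prior_var),  y_i | mu ~ N(mu, noise_var).
    """
    post_mean, post_var = 0.0, prior_var
    total = 0.0
    for y in ys:
        # Score the next point under the current posterior predictive.
        total += log_normal_pdf(y, post_mean, post_var + noise_var)
        # Conjugate Gaussian update of the posterior over mu.
        new_precision = 1.0 / post_var + 1.0 / noise_var
        post_mean = (post_mean / post_var + y / noise_var) / new_precision
        post_var = 1.0 / new_precision
    return total


def closed_form_log_evidence(ys, prior_var, noise_var):
    """Log marginal likelihood of the same model, computed in one shot.

    Marginally, y ~ N(0, noise_var * I + prior_var * 1 1^T); the determinant
    and inverse of that covariance have simple closed forms.
    """
    n = len(ys)
    s = sum(ys)
    ss = sum(y * y for y in ys)
    denom = noise_var + n * prior_var
    log_det = (n - 1) * math.log(noise_var) + math.log(denom)
    quad = (ss - prior_var * s * s / denom) / noise_var
    return -0.5 * (n * math.log(2 * math.pi) + log_det + quad)
```

Calling both functions on the same data, for example `ys = [0.3, -1.2, 0.8, 2.1]` with `prior_var=2.0` and `noise_var=0.5`, should give the same value up to floating-point error. A model whose sequential predictions improve quickly accumulates a larger sum, which is the intuition behind using a training-speed measure as a proxy for the marginal likelihood.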

Uncertainty-Aware Counterfactual Explanations for Medical Diagnosis

While deep learning algorithms can excel at predicting outcomes, they often act as black boxes, rendering them uninterpretable for healthcare practitioners. Counterfactual explanations (CEs) are a practical tool for demonstrating why machine learning models make particular decisions. We introduce a novel algorithm that leverages uncertainty to generate trustworthy counterfactual explanations for white-box models. Our proposed method can generate more interpretable CEs than the current benchmark (Van Looveren and Klaise, 2019) for breast cancer diagnosis. Further, our approach provides confidence levels for both the diagnosis and the explanation.

Lisa Schut, Oscar Key, Rory McGrath, Luca Costabello, Bogdan Sacaleanu, Medb Corcoran, Yarin Gal

ML4H: Machine Learning for Health Workshop NeurIPS, 2020

[Paper] [BibTex]

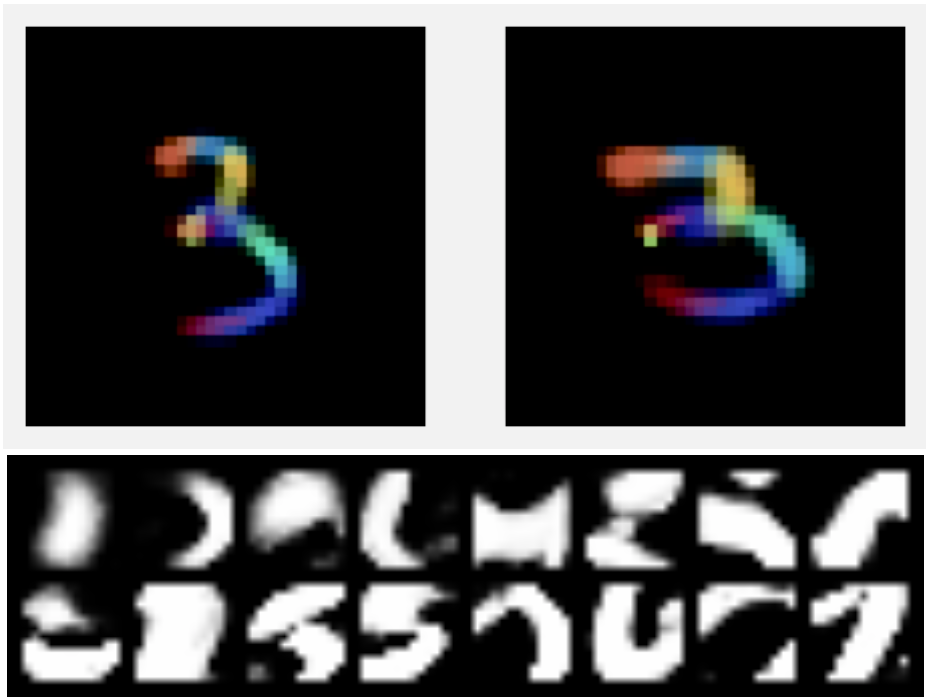

Capsule Networks: A Generative Probabilistic Perspective

'Capsule' models try to explicitly represent the poses of objects, enforcing a linear relationship between an object's pose and those of its constituent parts. This modelling assumption should lead to robustness to viewpoint changes since the object-component relationships are invariant to the poses of the object. We describe a probabilistic generative model that encodes these assumptions. Our probabilistic formulation separates the generative assumptions of the model from the inference scheme, which we derive from a variational bound. We experimentally demonstrate the applicability of our unified objective, and the use of test time optimisation to solve problems inherent to amortised inference.

Lewis Smith, Lisa Schut, Yarin Gal, Mark van der Wilk

Object Oriented Learning Workshop, ICML 2020

[Paper]

News items mentioning Lisa Schut:

Lisa Schut contributes to Humanity's Last Exam

24 Jan 2025

Lisa Schut contributed to Humanity’s Last Exam, a multi-modal benchmark at the frontier of human knowledge, designed to be the final closed-ended academic benchmark of its kind with broad subject coverage. HLE consists of 2,700 questions across dozens of subjects, including mathematics, humanities, and the natural sciences. HLE is developed globally by subject-matter experts and consists of multiple-choice and short-answer questions suitable for automated grading.

Lisa Schut gives talk at the Stanford HAI Fall Conference

24 Oct 2023

Together with Been Kim, Lisa Schut gave a talk at the Stanford HAI Fall Conference on New Horizons in Generative AI: Science, Creativity, and Society on ‘Leveraging AlphaZero to Improve our Understanding & Creativity in Chess’.

NeurIPS 2021

11 Oct 2021

Thirteen papers with OATML members accepted to the NeurIPS 2021 main conference. More information in our blog post.

ICML 2021

17 Jul 2021

Seven papers with OATML members accepted to ICML 2021, together with 14 workshop papers. More information in our blog post.

OATML student presents at Accenture Turing Innovation Symposium

02 Oct 2020

OATML graduate student Lisa Schut presented alongside Rory McGrath, from Accenture Labs, on “Counterfactual Explanations: Making AI decision-making more useful and trustworthy.” The research presented was joint work with Oscar Key and Professor Yarin Gal, in collaboration with Accenture Labs.

Blog Posts

13 OATML Conference papers at NeurIPS 2021

OATML group members and collaborators are proud to present 13 papers at the NeurIPS 2021 main conference. …

Jannik Kossen, Neil Band, Aidan Gomez, Clare Lyle, Tim G. J. Rudner, Yarin Gal, Binxin (Robin) Ru, Lisa Schut, Atılım Güneş Baydin, Andrew Jesson, Panagiotis Tigas, Joost van Amersfoort, Andreas Kirsch, Pascal Notin, Angelos Filos, 11 Oct 2021

21 OATML Conference and Workshop papers at ICML 2021

OATML group members and collaborators are proud to present 21 papers at ICML 2021, including 7 papers at the main conference and 14 papers at various workshops. Group members will also give invited talks and participate in panel discussions at the workshops. …

Angelos Filos, Clare Lyle, Jannik Kossen, Sebastian Farquhar, Tom Rainforth, Andrew Jesson, Sören Mindermann, Tim G. J. Rudner, Oscar Key, Binxin (Robin) Ru, Pascal Notin, Panagiotis Tigas, Andreas Kirsch, Jishnu Mukhoti, Joost van Amersfoort, Lisa Schut, Muhammed Razzak, Aidan Gomez, Jan Brauner, Yarin Gal, 17 Jul 2021

22 OATML Conference and Workshop papers at NeurIPS 2020

OATML group members and collaborators are proud to be presenting 22 papers at NeurIPS 2020. Group members are also co-organising various events around NeurIPS, including workshops, the NeurIPS Meet-Up on Bayesian Deep Learning and socials. …

Muhammed Razzak, Panagiotis Tigas, Angelos Filos, Atılım Güneş Baydin, Andrew Jesson, Andreas Kirsch, Clare Lyle, Freddie Kalaitzis, Jan Brauner, Jishnu Mukhoti, Lewis Smith, Lisa Schut, Mizu Nishikawa-Toomey, Oscar Key, Binxin (Robin) Ru, Sebastian Farquhar, Sören Mindermann, Tim G. J. Rudner, Yarin Gal, 04 Dec 2020

13 OATML Conference and Workshop papers at ICML 2020

We are glad to share the following 13 papers by OATML authors and collaborators to be presented at this ICML conference and workshops …

Angelos Filos, Sebastian Farquhar, Tim G. J. Rudner, Lewis Smith, Lisa Schut, Tom Rainforth, Panagiotis Tigas, Pascal Notin, Andreas Kirsch, Clare Lyle, Joost van Amersfoort, Jishnu Mukhoti, Yarin Gal, 10 Jul 2020