Autonomous Driving — Publications

Shifts: A Dataset of Real Distributional Shift Across Multiple Large-Scale Tasks

There has been significant research done on developing methods for improving robustness to distributional shift and uncertainty estimation. In contrast, only limited work has examined developing standard datasets and benchmarks for assessing these approaches. Additionally, most work on uncertainty estimation and robustness has developed new techniques based on small-scale regression or image classification tasks. However, many tasks of practical interest have different modalities, such as tabular data, audio, text, or sensor data, which offer significant challenges involving regression and discrete or continuous structured prediction. Thus, given the current state of the field, a standardized large-scale dataset of tasks across a range of modalities affected by distributional shifts is necessary. This will enable researchers to meaningfully evaluate the plethora of recently developed uncertainty quantification methods, as well as assessment criteria and state-of-the-art baselin... [full abstract]

Andrey Malinin, Neil Band, Alexander Ganshin, German Chesnokov, Yarin Gal, Mark J. F. Gales, Alexey Noskov, Andrey Ploskonosov, Liudmila Prokhorenkova, Ivan Provilkov, Vatsal Raina, Vyas Raina, Denis Roginskiy, Mariya Shmatova, Panagiotis Tigas, Boris Yangel

NeurIPS Datasets and Benchmarks Track, 2021

[arXiv] [BibTex] [Code]

[Competition Website] [Blog Post (OATML)] [Blog Post (Yandex Research)]

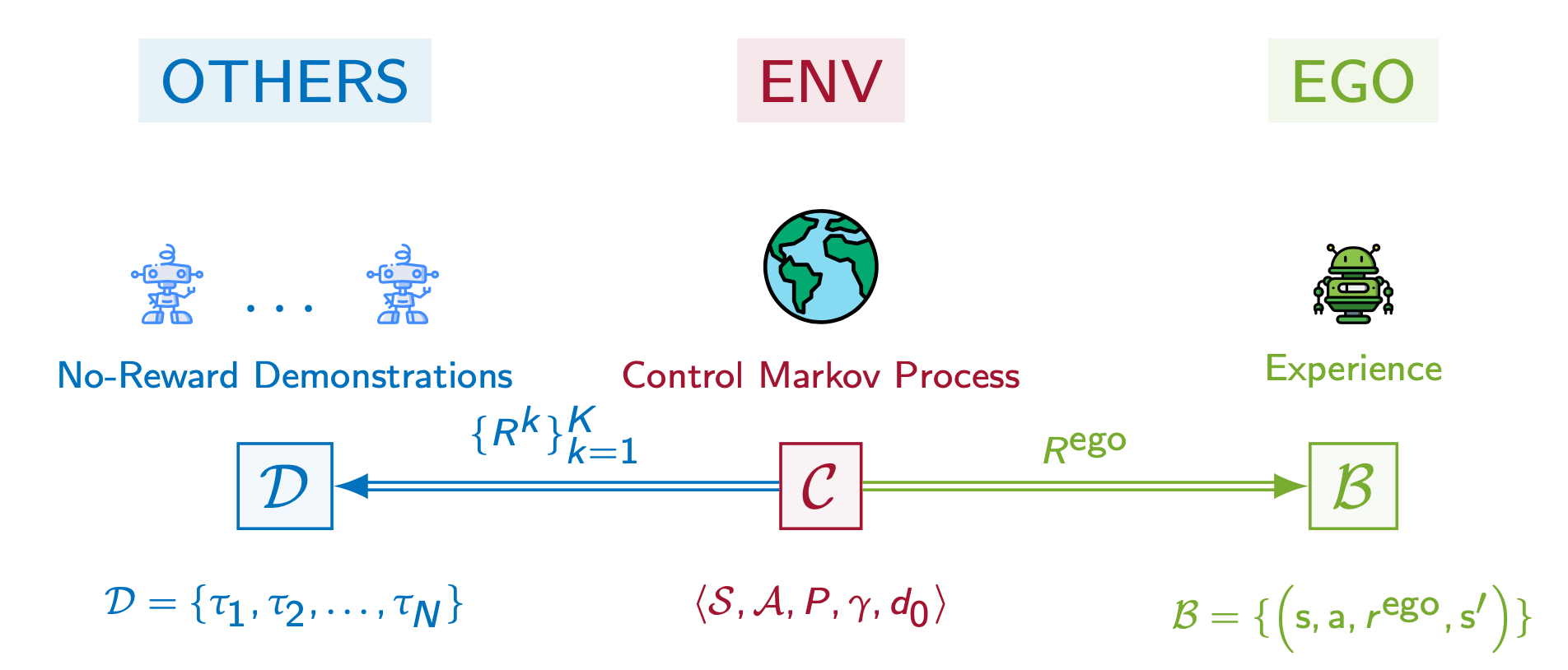

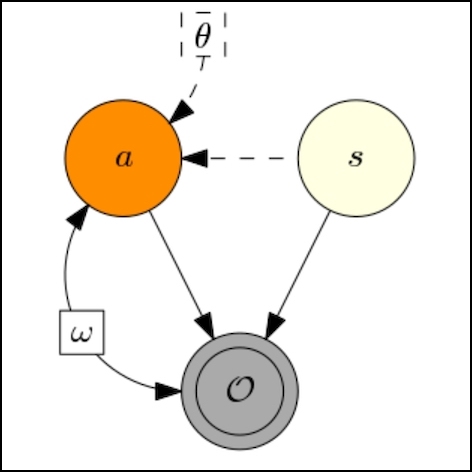

PsiPhi-Learning: Reinforcement Learning with Demonstrations using Successor Features and Inverse Temporal Difference Learning

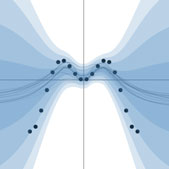

We study reinforcement learning (RL) with no-reward demonstrations, a setting in which an RL agent has access to additional data from the interaction of other agents with the same environment. However, it has no access to the rewards or goals of these agents, and their objectives and levels of expertise may vary widely. These assumptions are common in multi-agent settings, such as autonomous driving. To effectively use this data, we turn to the framework of successor features. This allows us to disentangle shared features and dynamics of the environment from agent-specific rewards and policies. We propose a multi-task inverse reinforcement learning (IRL) algorithm, called inverse temporal difference learning (ITD), that learns shared state features, alongside per-agent successor features and preference vectors, purely from demonstrations without reward labels. We further show how to seamlessly integrate ITD with learning from online environment interactions, arriving at... [full abstract]

Angelos Filos, Clare Lyle, Yarin Gal, Sergey Levine, Natasha Jaques, Gregory Farquhar

ICML, 2021 (long talk)

[Paper]

Invariant Representations for Reinforcement Learning without Reconstruction

We study how representation learning can accelerate reinforcement learning from rich observations, such as images, without relying either on domain knowledge or pixel-reconstruction. Our goal is to learn representations that provide for effective downstream control and invariance to task-irrelevant details. Bisimulation metrics quantify behavioral similarity between states in continuous MDPs, which we propose using to learn robust latent representations which encode only the task-relevant information from observations. Our method trains encoders such that distances in latent space equal bisimulation distances in state space. We demonstrate the effectiveness of our method at disregarding task-irrelevant information using modified visual MuJoCo tasks, where the background is replaced with moving distractors and natural videos, while achieving SOTA performance. We also test a first-person highway driving task where our method learns invariance to clouds, weather, and time of day. F... [full abstract]

Amy Zhang, Rowan McAllister, Roberto Calandra, Yarin Gal, Sergey Levine

ICLR, 2021 (Oral)

[Paper]

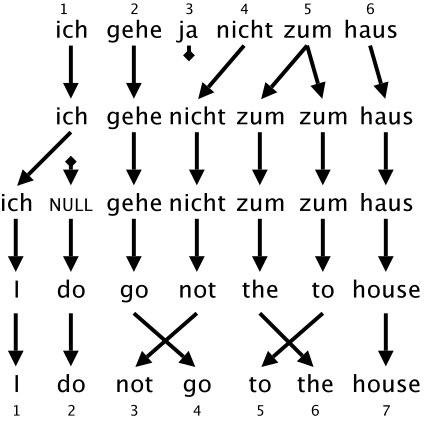

Real2sim: Automatic Generation of Open Street Map Towns For Autonomous Driving Benchmarks

Research in machine learning for autonomous driving (AD) is a constantly evolving field as researchers strive to build a Level 5 autonomous driving system. However, current benchmarks for such learning algorithms do not satisfactorily allow researchers to evaluate and compare performance across safety-critical metrics such as generalizability, out-of-distribution performance, etc. Reasons for this include the expensive nature of data collection from the real world for autonomous driving and the limitations of software tools currently available for autonomous driving simulators. We develop a pipeline that allows for automatic generation of new town maps for simulator environments from OpenStreetMap [Haklay and Weber, 2008]. We demonstrate that our pipeline is capable of generating towns that, when perceived via LiDAR, share a similar footprint to real-world gathered datasets like NuScenes [Caesar et al., 2020]. Additionally, we learn a realistic noise augmentation via Conditional ... [full abstract]

Avishek Mondal, Panagiotis Tigas, Yarin Gal

Machine Learning for Autonomous Driving Workshop at the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, Canada.

[Paper]

Can Autonomous Vehicles Identify, Recover From, and Adapt to Distribution Shifts?

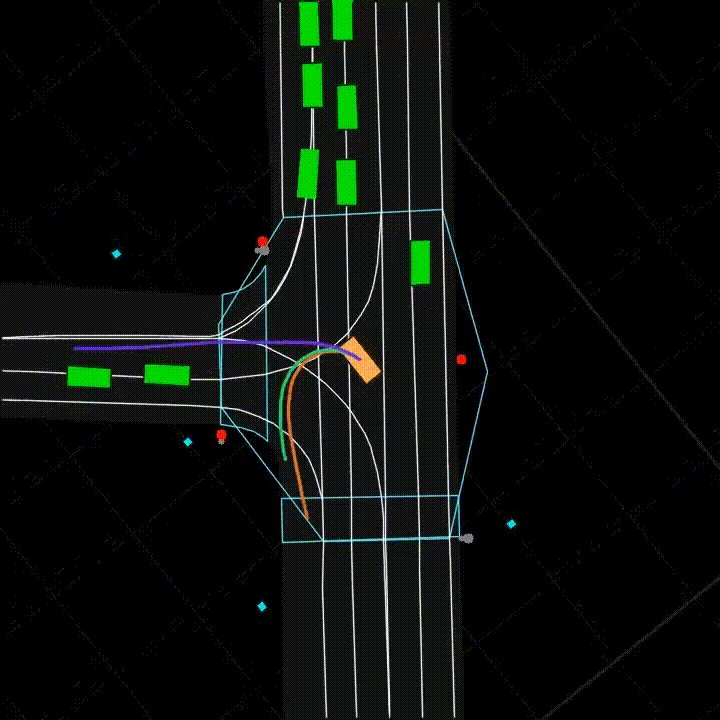

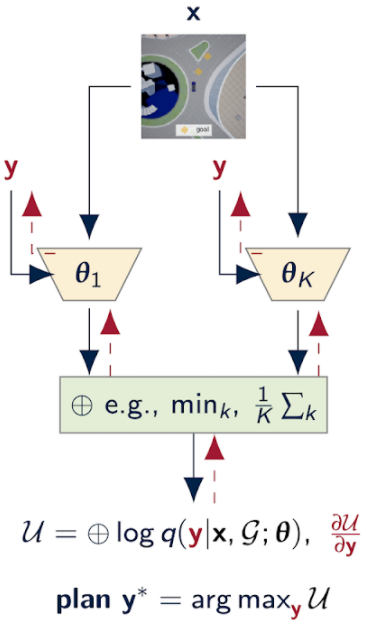

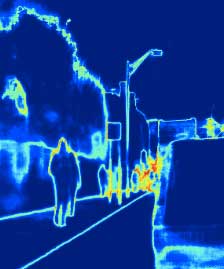

Out-of-training-distribution (OOD) scenarios are a common challenge of learning agents at deployment, typically leading to arbitrary deductions and poorly-informed decisions. In principle, detection of and adaptation to OOD scenes can mitigate their adverse effects. In this paper, we highlight the limitations of current approaches to novel driving scenes and propose an epistemic uncertainty-aware planning method, called robust imitative planning (RIP). Our method can detect and recover from some distribution shifts, reducing the overconfident and catastrophic extrapolations in OOD scenes. If the model’s uncertainty is too great to suggest a safe course of action, the model can instead query the expert driver for feedback, enabling sample-efficient online adaptation, a variant of our method we term adaptive robust imitative planning (AdaRIP). Our methods outperform current state-of-the-art approaches in the nuScenes prediction challenge, but since no be... [full abstract]

Angelos Filos, Panagiotis Tigas, Rowan McAllister, Nicholas Rhinehart, Sergey Levine, Yarin Gal

ICML, 2020

[Paper] [Code] [Website]

Uncertainty Quantification with Statistical Guarantees in End-to-End Autonomous Driving Control

Deep neural network controllers for autonomous driving have recently benefited from significant performance improvements, and have begun deployment in the real world. Prior to their widespread adoption, safety guarantees are needed on the controller behaviour that properly take account of the uncertainty within the model as well as sensor noise. Bayesian neural networks, which assume a prior over the weights, have been shown capable of producing such uncertainty measures, but properties surrounding their safety have not yet been quantified for use in autonomous driving scenarios. In this paper, we develop a framework based on a state-of-the-art simulator for evaluating end-to-end Bayesian controllers. In addition to computing pointwise uncertainty measures that can be computed in real time and with statistical guarantees, we also provide a method for estimating the probability that, given a scenario, the controller keeps the car safe within a finite horizon. We experimentally ev... [full abstract]

Rhiannon Michelmore, Matthew Wicker, Luca Laurenti, Luca Cardelli, Yarin Gal, Marta Kwiatkowska

2020 International Conference on Robotics and Automation (ICRA)

[arXiv]

Robust Imitative Planning: Planning from Demonstrations Under Uncertainty

Learning from expert demonstrations is an attractive framework for sequential decision-making in safety-critical domains such as autonomous driving, where trial and error learning has no safety guarantees during training. However, naïve use of imitation learning can fail by extrapolating incorrectly to unfamiliar situations, resulting in arbitrary model outputs and dangerous outcomes. This is especially true for high capacity parametric models such as deep neural networks, for processing high-dimensional observations from cameras or LIDAR. Instead, we model expert behaviour with a model able to capture uncertainty about previously unseen scenarios, as well as inherent stochasticity in expert demonstrations. We propose a framework for planning under epistemic uncertainty and also provide a practical realisation, called robust imitative planning (RIP), using an ensemble of deep neural density estimators. We demonstrate online robustness to out-of-training distribution scenarios on... [full abstract]

Panagiotis Tigas, Angelos Filos, Rowan McAllister, Nicholas Rhinehart, Sergey Levine, Yarin Gal

NeurIPS 2019 Workshop on Machine Learning for Autonomous Driving

[Paper]

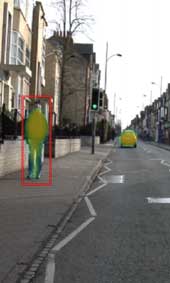

Evaluating Bayesian Deep Learning Methods for Semantic Segmentation

Deep learning has been revolutionary for computer vision and semantic segmentation in particular, with Bayesian Deep Learning (BDL) used to obtain uncertainty maps from deep models when predicting semantic classes. This information is critical when using semantic segmentation for autonomous driving, for example. Standard semantic segmentation systems have well-established evaluation metrics. However, with BDL’s rising popularity in computer vision we require new metrics to evaluate whether a BDL method produces better uncertainty estimates than another method. In this work we propose three such metrics to evaluate BDL models designed specifically for the task of semantic segmentation. We modify DeepLab-v3+, one of the state-of-the-art deep neural networks, and create its Bayesian counterpart using MC dropout and Concrete dropout as inference techniques. We then compare and test these two inference techniques on the well-known Cityscapes dataset using our suggested metrics. Our re... [full abstract]

Jishnu Mukhoti, Yarin Gal

arXiv

[arXiv] [BibTex]

Evaluating Uncertainty Quantification in End-to-End Autonomous Driving Control

Self-driving has benefited from significant performance improvements with the rise of deep learning, with millions of miles having been driven with no human intervention. Despite this, crashes and erroneous behaviours still occur, in part due to the complexity of verifying the correctness of DNNs and a lack of safety guarantees. In this paper, we demonstrate how quantitative measures of uncertainty can be extracted in real-time, and their quality evaluated in end-to-end controllers for self-driving cars. We propose evaluation techniques for the uncertainty on two separate architectures which use the uncertainty to predict crashes up to five seconds in advance. We find that mutual information, a measure of uncertainty in classification networks, is a promising indicator of forthcoming crashes.

Rhiannon Michelmore, Marta Kwiatkowska, Yarin Gal

In submission

[arXiv] [BibTex]

Loss-Calibrated Approximate Inference in Bayesian Neural Networks

Current approaches in approximate inference for Bayesian neural networks minimise the Kullback-Leibler divergence to approximate the true posterior over the weights. However, this approximation is without knowledge of the final application, and therefore cannot guarantee optimal predictions for a given task. To make more suitable task-specific approximations, we introduce a new loss-calibrated evidence lower bound for Bayesian neural networks in the context of supervised learning, informed by Bayesian decision theory. By introducing a lower bound that depends on a utility function, we ensure that our approximation achieves higher utility than traditional methods for applications that have asymmetric utility functions. Furthermore, in using dropout inference, we highlight that our new objective is identical to that of standard dropout neural networks, with an additional utility-dependent penalty term. We demonstrate our new loss-calibrated model with an illustrative medical examp... [full abstract]

Adam D. Cobb, Stephen J. Roberts, Yarin Gal

Theory of deep learning workshop, ICML, 2018

[arXiv] [Code] [BibTex]
