
Tom Rainforth

Associate Member (Faculty), started 2019

Tom is a machine learning researcher and faculty member of the Department of Statistics, where he runs his own group of around 10 DPhil students and teaches a course on Advanced Statistical Machine Learning. His research covers a wide range of topics across probabilistic machine learning, such as deep generative models, probabilistic programming, Monte Carlo methods, variational inference, and experimental design. He is an associate member of the OATML group, with whom he maintains a number of ongoing collaborations and co-supervises students.


Publications while at OATML:

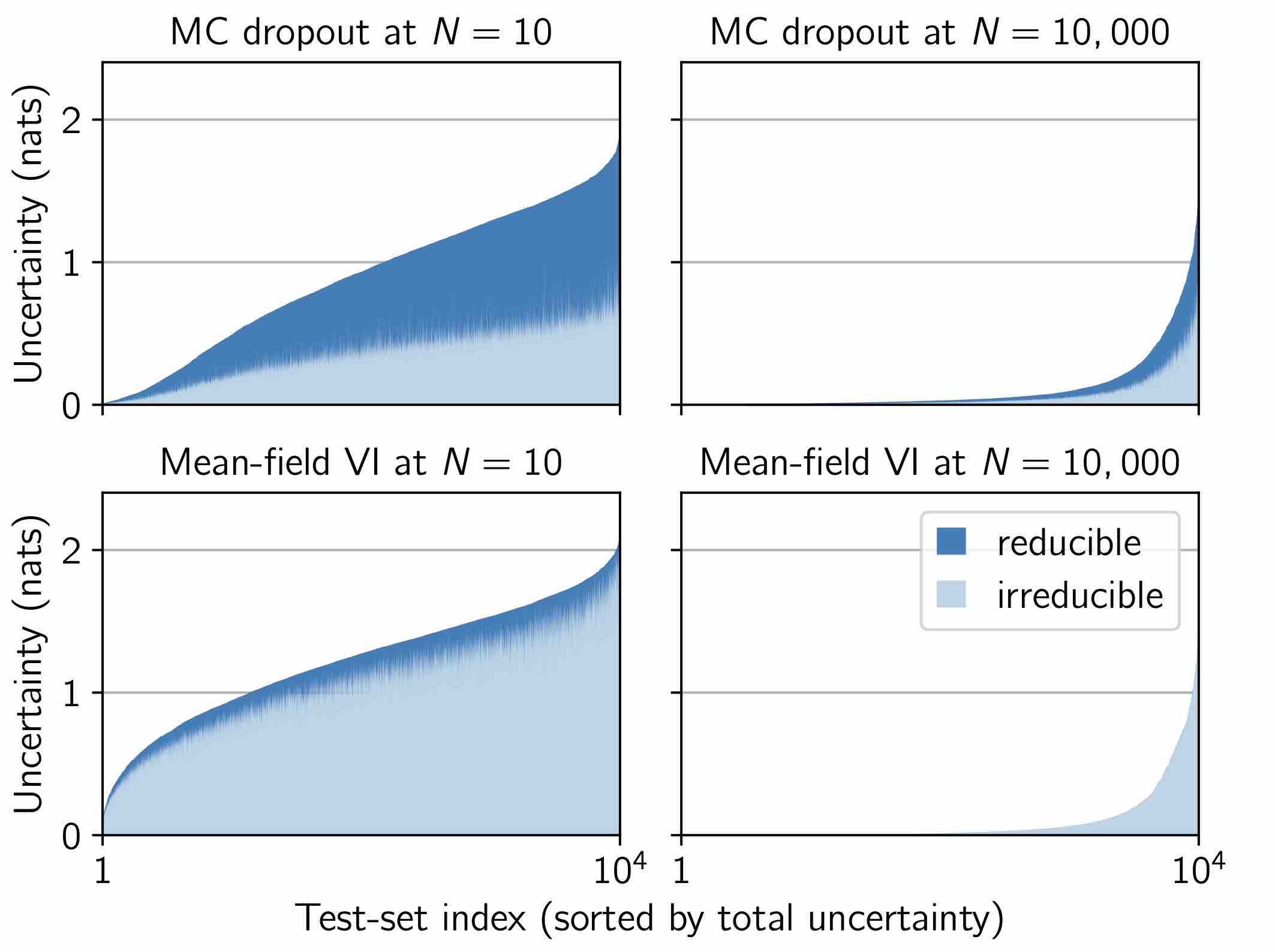

Making Better Use of Unlabelled Data in Bayesian Active Learning

Fully supervised models are predominant in Bayesian active learning. We argue that their neglect of the information present in unlabelled data harms not just predictive performance but also decisions about what data to acquire. Our proposed solution is a simple framework for semi-supervised Bayesian active learning. We find it produces better-performing models than either conventional Bayesian active learning or semi-supervised learning with randomly acquired data. It is also easier to scale up than the conventional approach. As well as supporting a shift towards semi-supervised models, our findings highlight the importance of studying models and acquisition methods in conjunction.

Freddie Bickford Smith, Adam Foster, Tom Rainforth

International Conference on Artificial Intelligence and Statistics (AISTATS), 2024

[Paper] [BibTeX]

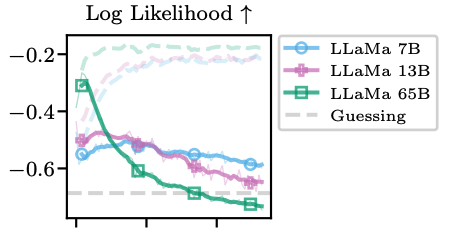

In-Context Learning Learns Label Relationships but Is Not Conventional Learning

The predictions of Large Language Models (LLMs) on downstream tasks often improve significantly when including examples of the input–label relationship in the context. However, there is currently no consensus about how this in-context learning (ICL) ability of LLMs works. For example, while Xie et al. (2022) liken ICL to a general-purpose learning algorithm, Min et al. (2022b) argue ICL does not even learn label relationships from in-context examples. In this paper, we provide novel insights into how ICL leverages label information, revealing both capabilities and limitations. To ensure we obtain a comprehensive picture of ICL behavior, we study probabilistic aspects of ICL predictions and thoroughly examine the dynamics of ICL as more examples are provided. Our experiments show that ICL predictions almost always depend on in-context labels and that ICL can learn truly novel tasks in-context. However, we also find that ICL struggles to fully overcome prediction preferences acquired... [full abstract]

Jannik Kossen, Yarin Gal, Tom Rainforth

ICLR, 2024

[OpenReview] [arXiv]

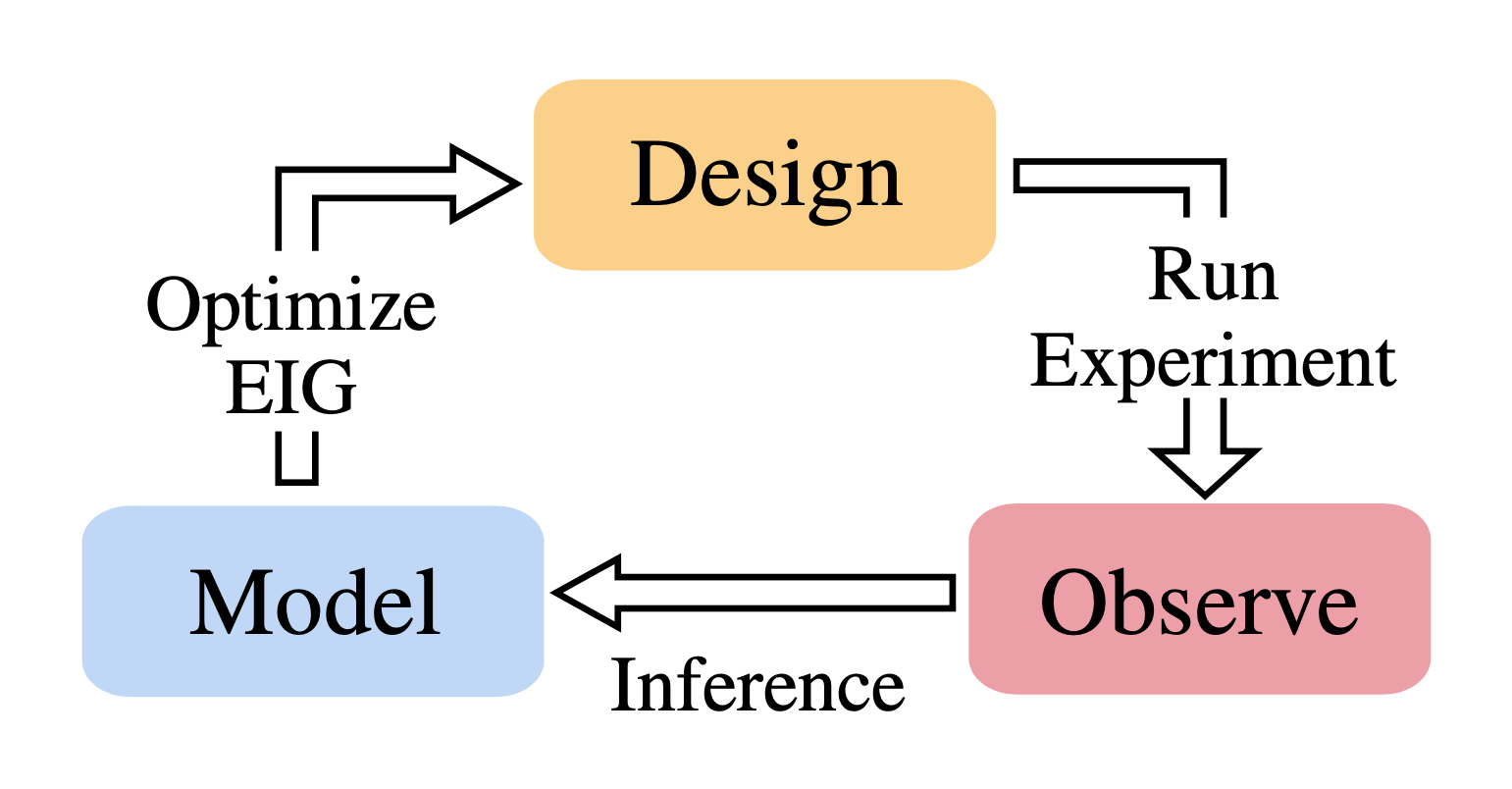

Modern Bayesian Experimental Design

Bayesian experimental design (BED) provides a powerful and general framework for optimizing the design of experiments. However, its deployment often poses substantial computational challenges that can undermine its practical use. In this review, we outline how recent advances have transformed our ability to overcome these challenges and thus utilize BED effectively, before discussing some key areas for future development in the field.

Tom Rainforth, Adam Foster, Desi R. Ivanova, Freddie Bickford Smith

Statistical Science

[Paper] [BibTeX]

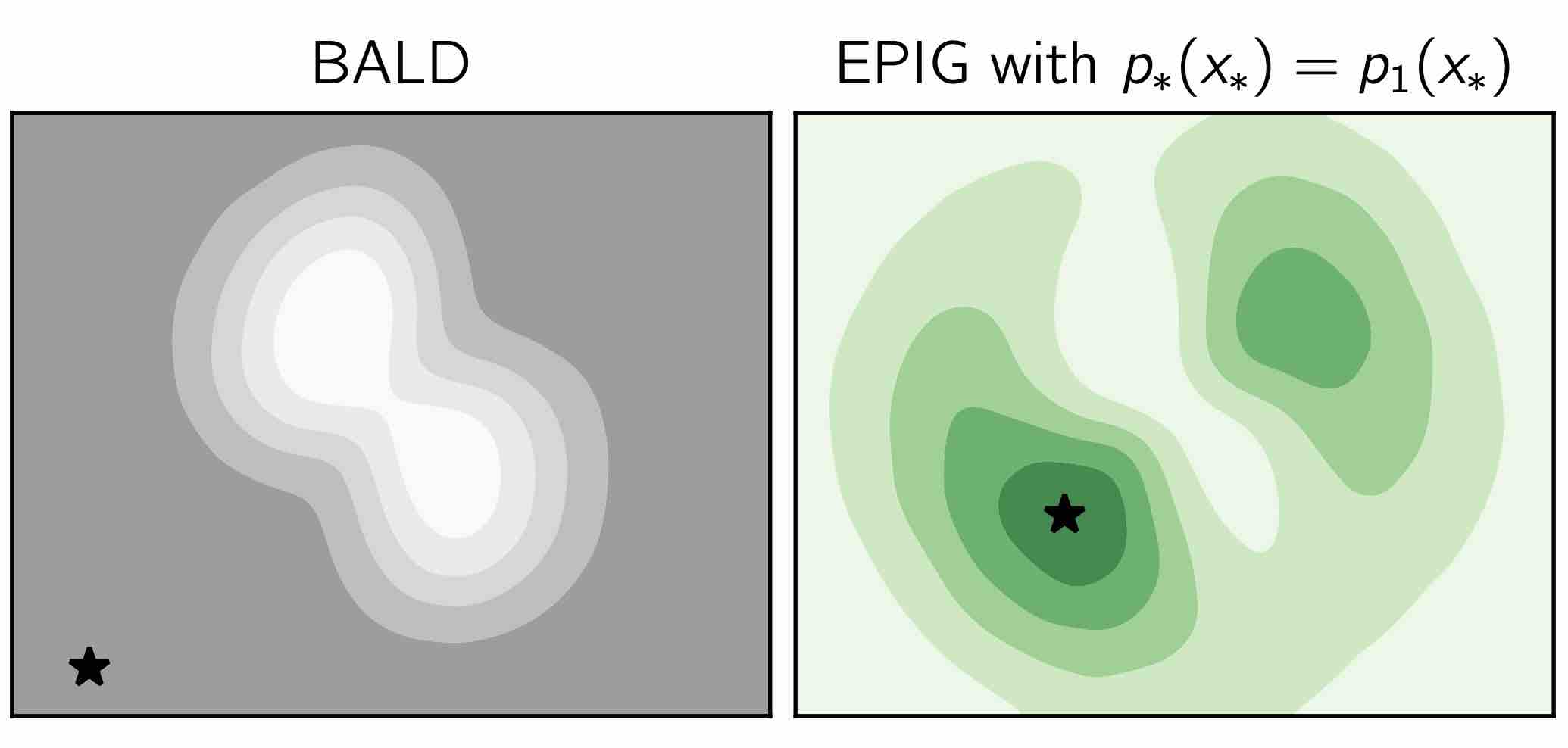

Prediction-Oriented Bayesian Active Learning

Information-theoretic approaches to active learning have traditionally focused on maximising the information gathered about the model parameters, most commonly by optimising the BALD score. We highlight that this can be suboptimal from the perspective of predictive performance. For example, BALD lacks a notion of an input distribution and so is prone to prioritise data of limited relevance. To address this we propose the expected predictive information gain (EPIG), an acquisition function that measures information gain in the space of predictions rather than parameters. We find that using EPIG leads to stronger predictive performance compared with BALD across a range of datasets and models, and thus provides an appealing drop-in replacement.

Freddie Bickford Smith, Andreas Kirsch, Sebastian Farquhar, Yarin Gal, Adam Foster, Tom Rainforth

International Conference on Artificial Intelligence and Statistics (AISTATS), 2023

[Paper] [BibTeX]

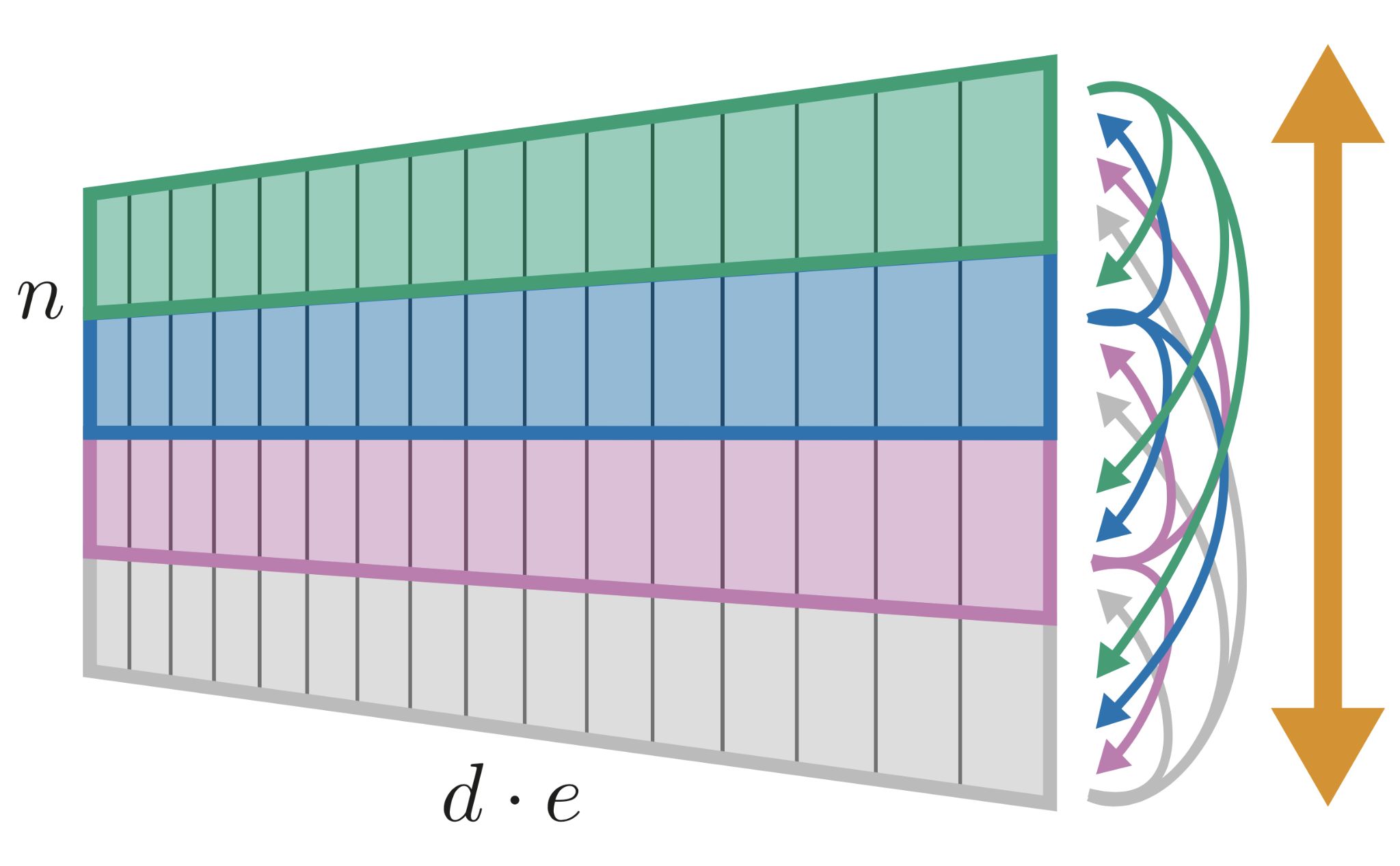

Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning

We challenge a common assumption underlying most supervised deep learning: that a model makes a prediction depending only on its parameters and the features of a single input. To this end, we introduce a general-purpose deep learning architecture that takes as input the entire dataset instead of processing one datapoint at a time. Our approach uses self-attention to reason about relationships between datapoints explicitly, which can be seen as realizing non-parametric models using parametric attention mechanisms. However, unlike conventional non-parametric models, we let the model learn end-to-end from the data how to make use of other datapoints for prediction. Empirically, our models solve cross-datapoint lookup and complex reasoning tasks unsolvable by traditional deep learning models. We show highly competitive results on tabular data, early results on CIFAR-10, and give insight into how the model makes use of the interactions between points.

Jannik Kossen, Neil Band, Clare Lyle, Aidan Gomez, Yarin Gal, Tom Rainforth

NeurIPS, 2021

[OpenReview] [arXiv] [Code]

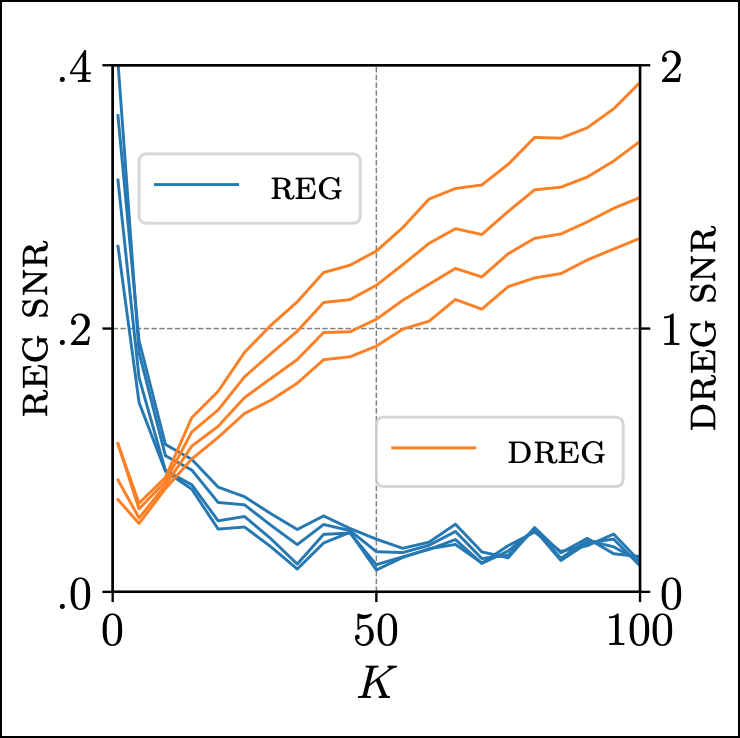

On Signal-to-Noise Ratio Issues in Variational Inference for Deep Gaussian Processes

We show that the gradient estimates used in training Deep Gaussian Processes (DGPs) with importance-weighted variational inference are susceptible to signal-to-noise ratio (SNR) issues. Specifically, we show both theoretically and empirically that the SNR of the gradient estimates for the latent variable's variational parameters decreases as the number of importance samples increases. As a result, these gradient estimates degrade to pure noise if the number of importance samples is too large. To address this pathology, we show how doubly-reparameterized gradient estimators, originally proposed for training variational autoencoders, can be adapted to the DGP setting and that the resultant estimators completely remedy the SNR issue, thereby providing more reliable training. Finally, we demonstrate that our fix can lead to improvements in the predictive performance of the model's predictive posterior.

Tim G. J. Rudner, Oscar Key, Yarin Gal, Tom Rainforth

ICML, 2021

[arXiv] [Code] [BibTeX]

Active Testing: Sample-Efficient Model Evaluation

We introduce active testing: a new framework for sample-efficient model evaluation. While approaches like active learning reduce the number of labels needed for model training, existing literature largely ignores the cost of labeling test data, typically unrealistically assuming large test sets for model evaluation. This creates a disconnect to real applications where test labels are important and just as expensive, e.g. for optimizing hyperparameters. Active testing addresses this by carefully selecting the test points to label, ensuring model evaluation is sample-efficient. To this end, we derive theoretically-grounded and intuitive acquisition strategies that are specifically tailored to the goals of active testing, noting these are distinct to those of active learning. Actively selecting labels introduces a bias; we show how to remove that bias while reducing the variance of the estimator at the same time. Active testing is easy to implement, effective, and can be applied to an... [full abstract]

Jannik Kossen, Sebastian Farquhar, Yarin Gal, Tom Rainforth

ICML, 2021

[PMLR] [arXiv]

Deep Adaptive Design: Amortizing Sequential Bayesian Experimental Design

We introduce Deep Adaptive Design (DAD), a general method for amortizing the cost of performing sequential adaptive experiments using the framework of Bayesian optimal experimental design (BOED). Traditional sequential BOED approaches require substantial computational time at each stage of the experiment. This makes them unsuitable for most real-world applications, where decisions must typically be made quickly. DAD addresses this restriction by learning an amortized design network upfront and then using this to rapidly run (multiple) adaptive experiments at deployment time. This network takes as input the data from previous steps, and outputs the next design using a single forward pass; these design decisions can be made in milliseconds during the live experiment. To train the network, we introduce contrastive information bounds that are suitable objectives for the sequential setting, and propose a customized network architecture that exploits key symmetries. We demonstrate that D... [full abstract]

Adam Foster, Desi R. Ivanova, Ilyas Malik, Tom Rainforth

ICML, 2021

[arXiv]

Probabilistic Programs with Stochastic Conditioning

We tackle the problem of conditioning probabilistic programs on distributions of observable variables. Probabilistic programs are usually conditioned on samples from the joint data distribution, which we refer to as deterministic conditioning. However, in many real-life scenarios, the observations are given as marginal distributions, summary statistics, or samplers. Conventional probabilistic programming systems lack adequate means for modeling and inference in such scenarios. We propose a generalization of deterministic conditioning to stochastic conditioning, that is, conditioning on the marginal distribution of a variable taking a particular form. To this end, we first define the formal notion of stochastic conditioning and discuss its key properties. We then show how to perform inference in the presence of stochastic conditioning. We demonstrate potential usage of stochastic conditioning on several case studies which involve various kinds of stochastic conditioning and are diff... [full abstract]

David Tolpin, Yuan Zhou, Tom Rainforth, Hongseok Yang

ICML, 2021

[arXiv]

Improving VAEs' Robustness to Adversarial Attack

Variational autoencoders (VAEs) have recently been shown to be vulnerable to adversarial attacks, wherein they are fooled into reconstructing a chosen target image. However, how to defend against such attacks remains an open problem. We make significant advances in addressing this issue by introducing methods for producing adversarially robust VAEs. Namely, we first demonstrate that methods proposed to obtain disentangled latent representations produce VAEs that are more robust to these attacks. However, this robustness comes at the cost of reducing the quality of the reconstructions. We ameliorate this by applying disentangling methods to hierarchical VAEs. The resulting models produce high-fidelity autoencoders that are also adversarially robust. We confirm their capabilities on several different datasets and with current state-of-the-art VAE adversarial attacks, and also show that they increase the robustness of downstream tasks to attack.

Matthew JF Willetts, Alexander Camuto, Tom Rainforth, Steve Roberts, Christopher Holmes

ICLR, 2021

[Paper]

Capturing Label Characteristics in VAEs

We present a principled approach to incorporating labels in variational autoencoders (VAEs) that captures the rich characteristic information associated with those labels. While prior work has typically conflated these by learning latent variables that directly correspond to label values, we argue this is contrary to the intended effect of supervision in VAEs—capturing rich label characteristics with the latents. For example, we may want to capture the characteristics of a face that make it look young, rather than just the age of the person. To this end, we develop a novel VAE model, the characteristic capturing VAE (CCVAE), which “reparameterizes” supervision through auxiliary variables and a concomitant variational objective. Through judicious structuring of mappings between latent and auxiliary variables, we show that the CCVAE can effectively learn meaningful representations of the characteristics of interest across a variety of supervision schemes. In particular, we show that ... [full abstract]

Tom Joy, Sebastian Schmon, Philip Torr, Siddharth N, Tom Rainforth

ICLR, 2021

[Paper]

Improving Transformation Invariance in Contrastive Representation Learning

We propose methods to strengthen the invariance properties of representations obtained by contrastive learning. While existing approaches implicitly induce a degree of invariance as representations are learned, we look to more directly enforce invariance in the encoding process. To this end, we first introduce a training objective for contrastive learning that uses a novel regularizer to control how the representation changes under transformation. We show that representations trained with this objective perform better on downstream tasks and are more robust to the introduction of nuisance transformations at test time. Second, we propose a change to how test time representations are generated by introducing a feature averaging approach that combines encodings from multiple transformations of the original input, finding that this leads to across the board performance gains. Finally, we introduce the novel Spirograph dataset to explore our ideas in the context of a differentiable gene... [full abstract]

Adam Foster, Rattana Pukdee, Tom Rainforth

ICLR, 2021

[Paper]

On Statistical Bias In Active Learning: How and When to Fix It

Active learning is a powerful tool when labelling data is expensive, but it introduces a bias because the training data no longer follows the population distribution. We formalize this bias and investigate the situations in which it can be harmful and sometimes even helpful. We further introduce novel corrective weights to remove bias when doing so is beneficial. Through this, our work not only provides a useful mechanism that can improve the active learning approach, but also an explanation for the empirical successes of various existing approaches which ignore this bias. In particular, we show that this bias can be actively helpful when training overparameterized models, like neural networks, with relatively modest dataset sizes.

Sebastian Farquhar, Yarin Gal, Tom Rainforth

ICLR, 2021 (Spotlight)

[Paper]

Divide, Conquer, and Combine: a New Inference Strategy for Probabilistic Programs with Stochastic Support

Universal probabilistic programming systems (PPSs) provide a powerful framework for specifying rich and complex probabilistic models. They further attempt to automate the process of drawing inferences from these models, but doing this successfully is severely hampered by the wide range of non-standard models they can express. As a result, although one can specify complex models in a universal PPS, the provided inference engines often fall far short of what is required. In particular, we show they produce surprisingly unsatisfactory performance for models where the support may vary between executions, often doing no better than importance sampling from the prior. To address this, we introduce a new inference framework: Divide, Conquer, and Combine, which remains efficient for such models, and show how it can be implemented as an automated and general-purpose PPS inference engine. We empirically demonstrate substantial performance improvements over existing approaches on two examples.

Yuan Zhou, Hongseok Yang, Yee Whye Teh, Tom Rainforth

ICML, 2020

[Paper]

On the Benefits of Disentangled Representations

Recently there has been a significant interest in learning disentangled representations, as they promise increased interpretability, generalization to unseen scenarios and faster learning on downstream tasks. In this paper, we investigate the usefulness of different notions of disentanglement for improving the fairness of downstream prediction tasks based on representations. We consider the setting where the goal is to predict a target variable based on the learned representation of high-dimensional observations (such as images) that depend on both the target variable and an unobserved sensitive variable. We show that in this setting both the optimal and empirical predictions can be unfair, even if the target variable and the sensitive variable are independent. Analyzing more than 12600 trained representations of state-of-the-art disentangled models, we observe that various disentanglement scores are consistently correlated with increased fairness, suggesting that disentanglement m... [full abstract]

Francesco Locatello, Gabriele Abbati, Tom Rainforth, Stefan Bauer, Bernhard Schölkopf, Olivier Bachem

NeurIPS, 2019

[arXiv]

Variational Bayesian Optimal Experimental Design

Bayesian optimal experimental design (BOED) is a principled framework for making efficient use of limited experimental resources. Unfortunately, its applicability is hampered by the difficulty of obtaining accurate estimates of the expected information gain (EIG) of an experiment. To address this, we introduce several classes of fast EIG estimators by building on ideas from amortized variational inference. We show theoretically and empirically that these estimators can provide significant gains in speed and accuracy over previous approaches. We further demonstrate the practicality of our approach on a number of end-to-end experiments.

Adam Foster, Martin Jankowiak, Eli Bingham, Paul Horsfall, Yee Whye Teh, Tom Rainforth, Noah Goodman

NeurIPS, 2019

[arXiv]

News items mentioning Tom Rainforth:

OATML researchers to present at Stanford University Lecture Course CS25: Transformers United

22 Aug 2021

OATML graduate students Aidan Gomez, Jannik Kossen, and Neil Band will be presenting their recent paper Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning that introduces Non-Parametric Transformers at the Stanford Lecture Course ‘CS25: Transformers United’ on November 1, 2021. Professor Yarin Gal, Dr. Tom Rainforth, and OATML DPhil student Clare Lyle are co-authors on the paper.

The lecture is available online here.

OATML researchers to speak at Google Research

22 Aug 2021

OATML students Jannik Kossen and Neil Band will be presenting their recent paper Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning at Google Research on September 14, 2021. Professor Yarin Gal, Dr. Tom Rainforth, and OATML DPhil students Clare Lyle and Aidan Gomez are co-authors on the paper.

OATML researcher presents at AI Campus Berlin

06 Aug 2021

OATML DPhil student Jannik Kossen gives invited talks at AI Campus Berlin on two recent papers: Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning and Active Testing: Sample-Efficient Model Evaluation. Recordings are available upon request. Announcements are here and here. Professor Yarin Gal, Dr. Tom Rainforth, and OATML graduate students Sebastian Farquhar, Neil Band, Clare Lyle, and Aidan Gomez are co-authors on the papers.

ICML 2021

17 Jul 2021

Seven papers with OATML members accepted to ICML 2021, together with 14 workshop papers. More information in our blog post.

OATML researchers to speak at Cohere

09 Jul 2021

OATML students Jannik Kossen and Neil Band present their recent paper Self-Attention Between Datapoints: Going Beyond Individual Input-Output Pairs in Deep Learning at Cohere on July 9, 2021. Professor Yarin Gal, Dr. Tom Rainforth, and OATML DPhil students Clare Lyle and Aidan Gomez are also co-authors on the paper.

Blog Posts

21 OATML Conference and Workshop papers at ICML 2021

OATML group members and collaborators are proud to present 21 papers at ICML 2021, including 7 papers at the main conference and 14 papers at various workshops. Group members will also be giving invited talks and participating in panel discussions at the workshops. …

Full post... Angelos Filos, Clare Lyle, Jannik Kossen, Sebastian Farquhar, Tom Rainforth, Andrew Jesson, Sören Mindermann, Tim G. J. Rudner, Oscar Key, Binxin (Robin) Ru, Pascal Notin, Panagiotis Tigas, Andreas Kirsch, Jishnu Mukhoti, Joost van Amersfoort, Lisa Schut, Muhammed Razzak, Aidan Gomez, Jan Brauner, Yarin Gal, 17 Jul 2021

13 OATML Conference and Workshop papers at ICML 2020

We are glad to share the following 13 papers by OATML authors and collaborators to be presented at this ICML conference and workshops …

Full post... Angelos Filos, Sebastian Farquhar, Tim G. J. Rudner, Lewis Smith, Lisa Schut, Tom Rainforth, Panagiotis Tigas, Pascal Notin, Andreas Kirsch, Clare Lyle, Joost van Amersfoort, Jishnu Mukhoti, Yarin Gal, 10 Jul 2020

25 OATML Conference and Workshop papers at NeurIPS 2019

We are glad to share the following 25 papers by OATML authors and collaborators to be presented at this NeurIPS conference and workshops. …

Full post... Angelos Filos, Sebastian Farquhar, Aidan Gomez, Tim G. J. Rudner, Zac Kenton, Lewis Smith, Milad Alizadeh, Tom Rainforth, Panagiotis Tigas, Andreas Kirsch, Clare Lyle, Joost van Amersfoort, Yarin Gal, 08 Dec 2019